What is the Simpson Paradox mental model? Well, have you ever noticed a pattern in data that suddenly reverses when you zoom out? Imagine two groups showing clear trends—like taller plants growing faster—but when combined, the results flip.

This puzzling twist isn’t magic. It’s a quirk of statistics known as Simpson’s paradox, and it challenges how we interpret information such as success rates.

Let’s say a hospital reports higher success rates for Treatment A within every patient group. But when all the data is merged, Treatment B appears better. How? A hidden factor, such as age or disease severity, can influence both which treatment patients receive and how well they recover, creating a misleading association that looks like a causal effect.

This phenomenon famously appeared in UC Berkeley’s 1973 graduate admissions data: the aggregated numbers seemed to show bias against women, but the gap largely vanished when departments were examined separately. The same pattern turns up in many other contexts.

Why does this matter? Because decisions based on surface-level trends can lead us astray. Whether analyzing business metrics or medical studies, understanding context and group dynamics is key.

Data-visualization experts such as Edward Tufte have long warned against reading data without digging deeper, because apparent cause-and-effect relationships can be misleading.

Key Takeaways

- Simpson’s paradox occurs when a trend visible within each subgroup weakens, disappears, or reverses once the subgroups are combined

- Hidden variables (like age or species) often drive trend reversals, affecting the overall success rate

- Real-world examples include medical studies and university admissions

- Effect sizes can change drastically, even flip sign, depending on how the data are grouped

- Critical analysis prevents flawed conclusions from incomplete data, ensuring we understand the variables at play

Introduction to the Simpson Paradox Mental Model

What if I told you a hospital could have better treatment results in every patient group but still lose overall? Or that a school outperforms rivals in every demographic yet trails in total averages?

This isn’t math gone wild—it’s how data whispers secrets we often miss.

The Flip-Flop Effect in Real Life

Imagine two college departments. Engineering admits 80% of female applicants vs. 60% of male applicants. Humanities accepts 40% of women vs. 20% of men.

Separately, both favor women. Combined? Men have a 52% overall acceptance rate vs. 48% for women. The number of applications each department receives from each group tilts the scales: most women applied to the more selective Humanities department.

| Department | Female Admit Rate | Male Admit Rate | Applicants (Female / Male) |
|---|---|---|---|
| Engineering | 80% | 60% | 100 / 400 |
| Humanities | 40% | 20% | 400 / 100 |
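A quick way to check pooled figures is to recompute them from counts rather than averaging percentages. The short Python sketch below turns the table’s rates and applicant numbers into admitted counts; the dictionary layout and the `pooled_rate` helper are illustrative choices, not part of any library.

```python
# Admission rate and number of applicants, per department and sex (table above).
departments = {
    "Engineering": {"female": (0.80, 100), "male": (0.60, 400)},
    "Humanities": {"female": (0.40, 400), "male": (0.20, 100)},
}

def pooled_rate(sex: str) -> float:
    """Total admitted divided by total applicants for one sex, across departments."""
    cells = [d[sex] for d in departments.values()]
    admitted = sum(rate * n for rate, n in cells)
    applicants = sum(n for _, n in cells)
    return admitted / applicants

for sex in ("female", "male"):
    print(f"{sex}: {pooled_rate(sex):.0%}")
```

Running it prints `female: 48%` and `male: 52%`: women lead in both departments but trail once the departments are pooled.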

Why Your Spreadsheet Lies Sometimes

This flip isn’t rare. In medical studies, Treatment A can beat B in both mild and severe cases yet lose when the groups are merged. Why? If A is given mostly to severe patients, their lower recovery rates drag down A’s overall average. It’s like judging a chef only by meals cooked on the busiest nights.

Three lessons emerge:

- Always ask: “What’s grouped together here?”

- Watch for hidden factors like age or location

- Bigger numbers don’t always mean clearer truth

Next time data surprises you, play detective. Split it by a likely hidden factor, and you might find a plot twist hiding in plain sight.

Defining the Simpson Paradox Phenomenon

Picture this: a new medication works better than the old one for both men and women in separate trials. But when results are combined, the old treatment suddenly appears superior.

How can this happen? It’s a classic case of a statistical phenomenon where grouped data tells a different story than individual analyses.

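Let’s break it down. Imagine a clinical trial with 200 patients, 100 men and 100 women. For men, 50% recover using Treatment X vs. 40% with Treatment Y. For women, 80% recover with X vs. 70% with Y.

Separately, X wins. Combined? Suppose 90 of the men receive X and 10 receive Y, while 90 of the women receive Y and 10 receive X. Then X’s overall recovery rate is 53% (45 + 8 recoveries out of 100) and Y’s is 67% (4 + 63 out of 100). Y seems better only because it was given mostly to women, who recover more often regardless of treatment.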
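To make the reversal test explicit, here is a small Python sketch. `is_simpson_reversal` is a hypothetical helper (not a standard library routine) that compares the within-group winner with the pooled winner, and the trial counts assume the allocation described above: 90 of 100 men get X and 90 of 100 women get Y.

```python
from typing import Dict, Tuple

# counts[group][treatment] = (recovered, patients)
Counts = Dict[str, Dict[str, Tuple[int, int]]]

def rate(recovered: int, patients: int) -> float:
    return recovered / patients

def pooled(counts: Counts, t: str) -> float:
    """Recovery rate for treatment t after ignoring the grouping."""
    recovered = sum(g[t][0] for g in counts.values())
    patients = sum(g[t][1] for g in counts.values())
    return recovered / patients

def is_simpson_reversal(counts: Counts, a: str, b: str) -> bool:
    """True if one treatment wins in every group but loses once groups are pooled."""
    a_wins_each = all(rate(*g[a]) > rate(*g[b]) for g in counts.values())
    b_wins_each = all(rate(*g[b]) > rate(*g[a]) for g in counts.values())
    return (a_wins_each and pooled(counts, a) < pooled(counts, b)) or (
        b_wins_each and pooled(counts, b) < pooled(counts, a)
    )

# Hypothetical allocation: most men get X, most women get Y.
trial: Counts = {
    "men": {"X": (45, 90), "Y": (4, 10)},    # 50% vs 40%
    "women": {"X": (8, 10), "Y": (63, 90)},  # 80% vs 70%
}
print(is_simpson_reversal(trial, "X", "Y"))  # True
```

With an even split (50 men and 50 women per arm) the same rates give X 65% vs. Y 55% overall, and the helper returns `False`: the reversal needs both the rate gap between groups and the lopsided allocation.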

Three key ideas explain this reversal:

- Group size differences weight the results

- Hidden variables like gender or location skew comparisons

- Surface-level numbers often hide true patterns

Consider sales regions. Product A turns shoppers into buyers at a higher rate than Product B in both urban and rural stores. But nationally, B’s conversion rate is higher. Why? A is stocked mostly in rural stores, where every product converts less often, while B sells mainly in urban stores. The grouping method changes everything.

Next time you see surprising stats, ask: “What’s being combined here?” You might discover the real story lies in the subgroups.

Historical Background and Key Developments

Did you know statisticians argued about data reversals, including the famous Simpson’s paradox, before computers existed? Long before spreadsheets, curious minds noticed how variables in data could tell opposite stories when viewed differently.

Let’s explore how this puzzle took shape and why understanding the causal structure behind these reversals matters.

Early Research and Simpson’s 1951 Study

In 1951, Edward Simpson published a paper explaining how combining data could flip trends. But he wasn’t first. Karl Pearson spotted similar quirks in 1899 while studying heredity.

He found that parental traits could appear stronger in combined groups than individual families—a head-scratcher for early statisticians.

Contributions by Pearson, Yule, and Blyth

George Yule later showed how variables like economic class skewed mortality rates. His 1903 work revealed death rates could reverse when splitting data by income groups.

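Then came Colin Blyth in 1972. He gave the phenomenon the name “Simpson’s paradox” and, using a hypothetical medical example, showed how extreme the reversal can be: a treatment can look better in every subgroup yet worse overall. His paper also linked the paradox to the sure-thing principle.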

Imagine testing aspirin:

- Works better for headaches in men and women separately

- Appears worse overall if most aspirin users come from the group whose headaches are harder to relieve

These pioneers taught us to question surface-level numbers. Their work reminds us: truth often hides between the lines.

Mathematical Underpinnings and Statistical Foundations

How do numbers tell conflicting stories? It starts with probabilities that change their tune based on hidden conditions. Let’s unpack the math behind these puzzling reversals.

Understanding Conditional Probabilities

Conditional probability answers: “What’s the chance of X if Y happens?” Imagine 100 patients—60 men, 40 women. If Treatment A works for 70% of women but 30% of men, the gender-specific success rates matter more than the overall average.
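Formally, a treatment’s overall success rate is a weighted average of its subgroup rates, weighted by how that treatment’s patients are split across the subgroups (the law of total probability). Here S stands for success, T for the treatment, and g ranges over the subgroups, for example men and women:

```latex
P(S \mid T) = \sum_{g} P(S \mid T, g)\, P(g \mid T)
```

If the weights P(g | T) differ between treatments, the pooled rates can rank the treatments differently from every subgroup.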

Consider this medical case:

| Group | Treatment A Success | Treatment B Success | Total Patients |
|---|---|---|---|
| Men | 30% | 40% | 60 |
| Women | 70% | 60% | 40 |
| Combined | 46% | 48% | 100 |

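Separately, A beats B for women, while B beats A for men. Combined? B edges ahead. Why? Each combined rate is a weighted average of the group rates, and men, among whom B does better, make up 60% of the patients in each arm (0.6 × 30% + 0.4 × 70% = 46% for A; 0.6 × 40% + 0.4 × 60% = 48% for B). A true Simpson reversal needs one more ingredient: the two treatments must reach different mixes of patients.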
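The weighted-average arithmetic can be sketched in a few lines of Python. The 60/40 mix is the only split consistent with the table’s combined rates; the lopsided mixes in the second half are hypothetical, added to show how the pooled numbers move when the arms reach different patients.

```python
# Subgroup success rates from the table.
rates = {"A": {"men": 0.30, "women": 0.70}, "B": {"men": 0.40, "women": 0.60}}

def pooled_rate(treatment: str, shares: dict) -> float:
    """Weighted average of subgroup rates; shares maps group -> P(group | treatment)."""
    return sum(rates[treatment][g] * w for g, w in shares.items())

same_mix = {"men": 0.6, "women": 0.4}  # 60 men and 40 women in each arm
print(round(pooled_rate("A", same_mix), 2))  # 0.46
print(round(pooled_rate("B", same_mix), 2))  # 0.48

# Hypothetical: A's patients are mostly women, B's mostly men.
print(round(pooled_rate("A", {"men": 0.2, "women": 0.8}), 2))  # 0.62
print(round(pooled_rate("B", {"men": 0.8, "women": 0.2}), 2))  # 0.44
```

Nothing about either treatment changed between the two scenarios; only the weights did.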

Association Measures and Their Interpretations

Correlation measures how variables move together. But group differences can flip the script. Suppose a study finds that, among men and among women separately, higher income goes with lower stress.

Combined, the link could reverse if one group both earns more on average and reports more stress at every income level.

Three tips to avoid traps:

- Calculate rates per subgroup (like age or location)

- Check if sample sizes balance fairly

- Ask: “What’s masked by the big picture?”

Numbers whisper secrets—but only if we listen to their conditions first.

Causal Inference and the Role of Confounding Variables

What if your headache pill seems to work better at night than in the morning? The difference might come from hidden factors like stress levels or meal times rather than from the pill itself.

This is where confounding variables enter the picture—hidden influences that twist our view of cause and effect.

Spotting Hidden Puppeteers in Data

Let’s say two kidney stone treatments show surprising results. Treatment A succeeds 93% of the time for small stones and 73% for large ones, beating Treatment B (87% and 69%) in both cases. When combined, B appears better overall.

Why? Because stone size secretly affects both treatment choice and success rates.

| Stone Size | Treatment A Success | Treatment B Success | Total Patients |
|---|---|---|---|
| Small | 93% | 87% | 357 |
| Large | 73% | 69% | 343 |
| Combined | 78% | 83% | 700 |

See the twist? Doctors more often chose Treatment A for large stones, which are harder to treat. Without considering stone size, we’d wrongly credit B as the better option.

Three clues help uncover these hidden influencers:

- Look for factors affecting both cause and outcome (like stone size)

- Check if group sizes differ wildly between categories

- Ask: “What else changed when we merged the data?”
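The first clue can be checked directly from counts. This Python sketch uses the kidney stone counts reported later in this article (from the study by Charig and colleagues, 1986) and asks whether stone size is linked both to which treatment patients got and to whether treatment succeeded. The helper names are illustrative.

```python
# counts[(size, treatment)] = (successes, patients)
counts = {
    ("small", "A"): (81, 87), ("small", "B"): (234, 270),
    ("large", "A"): (192, 263), ("large", "B"): (55, 80),
}

def share_large(treatment: str) -> float:
    """Fraction of a treatment's patients who had large stones."""
    large = counts[("large", treatment)][1]
    return large / (large + counts[("small", treatment)][1])

def success_by_size(size: str) -> float:
    """Success rate for one stone size, pooling both treatments."""
    s = sum(counts[(size, t)][0] for t in ("A", "B"))
    n = sum(counts[(size, t)][1] for t in ("A", "B"))
    return s / n

# Link 1: stone size vs. treatment choice
print(f"A patients with large stones: {share_large('A'):.0%}")  # 75%
print(f"B patients with large stones: {share_large('B'):.0%}")  # 23%
# Link 2: stone size vs. outcome
print(f"success, small stones: {success_by_size('small'):.0%}")  # 88%
print(f"success, large stones: {success_by_size('large'):.0%}")  # 72%
```

Stone size passes both tests, which makes it a strong confounder candidate. A variable linked to only one of the two would not distort the treatment comparison in this way.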

Medical studies often face this challenge. A COVID-19 drug might seem to work in mild cases but fail in severe ones. Careful analysis separates true effects from data illusions.

Next time you see surprising stats, play detective—what’s pulling the strings behind the scenes?

Data Analysis Techniques to Uncover the Paradox

What if your local library drew more visitors per open hour than the branch across town on both weekdays and weekends, yet fewer per hour overall? The secret lies in how we crunch the numbers: it is Simpson’s paradox at work.

Let’s explore simple tools that reveal hidden truths in grouped information, especially when success rates vary from group to group.

Weighted Averages and Conditional Analysis

Imagine two university departments. Department X promotes 60% of its 10 female staff each year. Department Y promotes 30% of its 100 female staff.

Combined data shows about 33% of women promoted (36 of 110), barely above Department Y’s rate and far below Department X’s. Why? The larger department dominates the weighted average.

| Department | Female Promotion Rate | Female Staff |
|---|---|---|
| X | 60% | 10 |
| Y | 30% | 100 |

Three steps help spot these patterns:

- Calculate rates for each group separately

- Compare group sizes using simple ratios

- Check if larger groups dominate the totals
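The three steps map onto a few lines of Python. This is a minimal sketch using the department table; it treats the headcounts as female staff, since only women’s promotions are being compared.

```python
# Female promotion rate and female headcount per department.
departments = {"X": (0.60, 10), "Y": (0.30, 100)}

total_staff = sum(n for _, n in departments.values())

for name, (rate, n) in departments.items():
    # Step 1: each group's own rate. Step 2: its share of the total (its weight).
    weight = n / total_staff
    print(f"{name}: rate {rate:.0%}, weight {weight:.0%}")

# Step 3: the combined rate is the weighted average, pulled toward the big group.
combined = sum(rate * n for rate, n in departments.values()) / total_staff
print(f"combined: {combined:.1%}")
```

It prints a 9% weight for X, a 91% weight for Y, and a combined rate of 32.7%, which is why the total sits so close to Department Y’s figure.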

A recent study of retail chains showed this clearly. Store A had higher weekend sales per customer, Store B better weekday numbers. Combined, Store B looked superior—until analysts accounted for foot traffic differences.

Why does this happen? Hidden distributions act like invisible weights. Your morning coffee might taste stronger when you use two small scoops instead of one big one—even with the same total coffee.

Numbers work similarly.

Next time you review data, ask: “Are we mixing teaspoons and tablespoons here?” The answer might change everything.

Simpson’s Paradox in Medical Research

Why would doctors choose a treatment that seems less effective? The answer lies in how we group patient information. Medical studies often reveal surprising twists when hidden factors influence results.

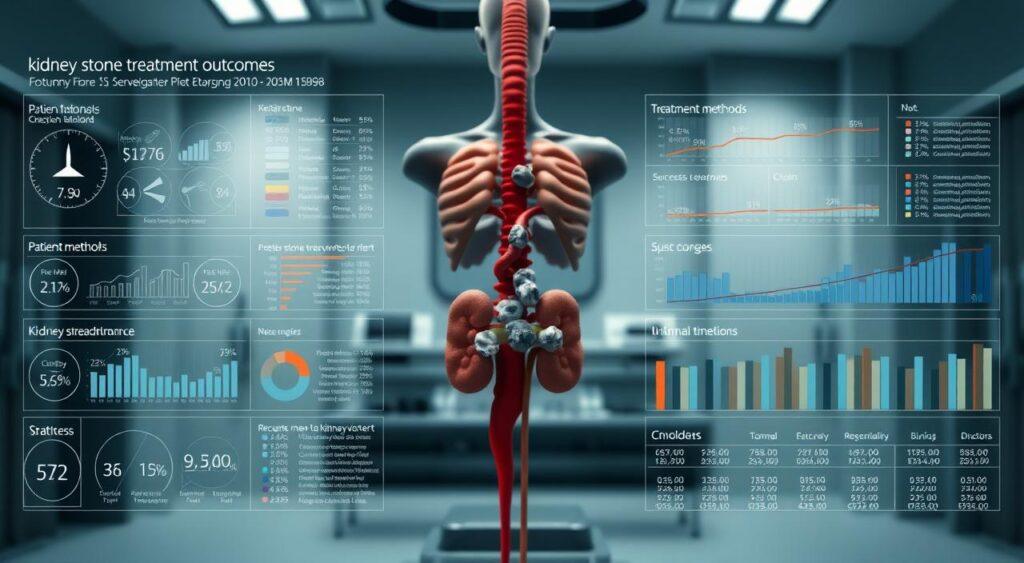

Breaking Down Kidney Stone Research

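Consider a real study comparing two treatments for kidney stones (Charig and colleagues, 1986, comparing open surgery, Treatment A, with percutaneous nephrolithotomy, Treatment B). Treatment A succeeded 93% of the time for small stones vs. Treatment B’s 87%. For large stones, A scored 73% vs. B’s 69%.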

Both cases favored Treatment A. But when combined, the numbers flipped.

| Stone Size | Treatment A Success | Treatment B Success |
|---|---|---|
| Small | 93% (81/87) | 87% (234/270) |
| Large | 73% (192/263) | 69% (55/80) |
| Combined | 78% (273/350) | 83% (289/350) |

Here’s the critical fact: doctors used Treatment A more often for large stones, which are harder to treat. The group sizes weighted the results. More challenging cases dragged down A’s overall numbers.
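The reversal can be reproduced from the raw counts in the table. This Python sketch uses `fractions.Fraction` so the comparisons are exact rather than subject to floating-point rounding; the dictionary layout is just one convenient choice.

```python
from fractions import Fraction

# (successes, patients) for each treatment and stone size
data = {
    "A": {"small": (81, 87), "large": (192, 263)},
    "B": {"small": (234, 270), "large": (55, 80)},
}

def rate(successes: int, patients: int) -> Fraction:
    return Fraction(successes, patients)

for size in ("small", "large"):
    a, b = rate(*data["A"][size]), rate(*data["B"][size])
    print(f"{size}: A {float(a):.0%} vs B {float(b):.0%} -> A wins: {a > b}")

# Pool each treatment's successes and patients across stone sizes.
pooled = {
    t: rate(sum(s for s, _ in g.values()), sum(n for _, n in g.values()))
    for t, g in data.items()
}
print(f"pooled: A {float(pooled['A']):.0%} vs B {float(pooled['B']):.0%}")
```

A wins for small stones (93% vs. 87%) and large stones (73% vs. 69%), yet the pooled line reads 78% vs. 83%.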

Three key lessons emerge:

- Success rates can flip based on case distribution

- Treatment choices often depend on problem severity

- Combined data might hide crucial patterns

This phenomenon teaches us to ask: “What’s the story behind the averages?” Medical knowledge grows when we examine both group details and big-picture trends.

Exploring Gender Bias and Graduate Admissions

What if a university’s overall statistics suggested bias against women, but deeper analysis told a different story? The 1973 UC Berkeley graduate admissions data offers a classic lesson in how Simpson’s paradox can distort reality.

The pooled numbers misrepresent applicants’ chances because they ignore where applicants chose to apply.

At first glance, men had a 44% acceptance rate versus 35% for women. But when split by department, the gap largely vanished; in four of the six largest departments, women were admitted at a slightly higher rate. Women applied more often to highly competitive programs like English, while men dominated applications to less competitive ones like engineering.

The published department data were anonymized, so the table below is illustrative: it uses admission rates similar to two of the real departments, with round applicant counts.

| Department | Female Admit Rate | Male Admit Rate | Applicants (F/M) |
|---|---|---|---|
| Engineering | 82% | 62% | 100 / 400 |
| English | 24% | 28% | 400 / 100 |

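Notice the twist? Women were admitted at a much higher rate than men in engineering and at nearly the same rate in English. But 80% of the women applied to English, where few applicants of either sex get in, while 80% of the men applied to engineering. Pooled, women’s rate (about 36%) falls far below men’s (about 55%). The statistics flip when you account for where people applied.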
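The pooled rates for the illustrative table can be checked with a short Python sketch. The numbers are the table’s illustrative ones, not the real Berkeley counts, and the helper names are hypothetical.

```python
# Illustrative table: (admit rate, applicants) per department and sex.
table = {
    "Engineering": {"F": (0.82, 100), "M": (0.62, 400)},
    "English": {"F": (0.24, 400), "M": (0.28, 100)},
}

def pooled(sex: str) -> float:
    """Overall admit rate for one sex across both departments."""
    admitted = sum(d[sex][0] * d[sex][1] for d in table.values())
    applied = sum(d[sex][1] for d in table.values())
    return admitted / applied

def share_to(dept: str, sex: str) -> float:
    """Fraction of one sex's applications that went to a given department."""
    return table[dept][sex][1] / sum(d[sex][1] for d in table.values())

print(f"women: {pooled('F'):.1%}, men: {pooled('M'):.1%}")
print(f"applied to English: women {share_to('English', 'F'):.0%}, "
      f"men {share_to('English', 'M'):.0%}")
```

It reports 35.6% for women vs. 55.2% for men, with 80% of women and 20% of men applying to English: the application pattern, not the department-level decisions, drives the gap.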

Three key insights emerge:

- Averages hide crucial patterns in grouped data

- Application distribution creates misleading differences

- True bias often lies in access to opportunities, not raw numbers

Next time you see statistics about fairness, ask: “What’s grouped together here?”

You might discover the real story isn’t in the totals—it’s in the teams, departments, or neighborhoods behind them.

Statistical Measures: Differences, Ratios, and Odds

Have you ever compared two success rates and felt confused by the numbers? Let’s unpack three simple ways to measure outcomes—like telling apart apples from oranges in a fruit salad.

Comparing Success Rates and Effect Sizes

Differences show gaps between groups. If 60% of Group A recovers vs. 40% of Group B, that’s a 20-percentage-point difference. Easy math, but watch out! A 2020 study found Italy’s COVID-19 fatality rate was 12% vs. South Korea’s 2%.

The problem? Italy’s older population skewed the comparison.

Ratios compare relative performance. UC Berkeley’s 1973 admissions data showed men were about 1.26 times as likely to be accepted (44% ÷ 35%). But within most departments, the ratio was close to 1 or slightly favored women.

Like saying “This car goes twice as fast!” without mentioning it’s downhill.

Odds measure likelihood differently. Imagine 70 yes-votes vs. 30 no-votes. The odds are 7:3 (2.33), not 70%. A lung cancer study found passive smokers had 1.385 times higher odds of illness—but only when accounting for age groups.
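The three measures are one-liners, so computing all of them side by side is cheap. This is a plain Python sketch (the function names are illustrative) applied to the recovery, Berkeley, and voting examples from this section.

```python
def difference(p1: float, p2: float) -> float:
    """Risk difference between two proportions (x100 for percentage points)."""
    return p1 - p2

def ratio(p1: float, p2: float) -> float:
    """Relative risk: how many times as likely the outcome is in group 1."""
    return p1 / p2

def odds(p: float) -> float:
    """Odds of an event with probability p."""
    return p / (1 - p)

def odds_ratio(p1: float, p2: float) -> float:
    return odds(p1) / odds(p2)

# Recovery example: 60% vs 40%
print(f"difference: {difference(0.60, 0.40) * 100:.0f} points")  # 20 points
print(f"ratio: {ratio(0.60, 0.40):.2f}")                         # 1.50
print(f"odds ratio: {odds_ratio(0.60, 0.40):.2f}")               # 2.25
# Berkeley 1973 overall admission rates: 44% (men) vs 35% (women)
print(f"ratio: {ratio(0.44, 0.35):.2f}")                         # 1.26
# Voting example: 70 yes vs 30 no
print(f"odds: {odds(0.70):.2f}")                                 # 2.33
```

The same 60% vs. 40% gap reads as 20 points, 1.5 times, or an odds ratio of 2.25, which is why it helps to state which measure a claim uses.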

Three tips for clearer analysis:

- Always ask: “Are we measuring apples-to-apples?”

- Check if hidden factors (like age) tilt the scales

- Use multiple measures to cross-verify results

Why does this matter? Because the way we crunch numbers shapes our conclusion.

Next time you see stats, ask: “What story do these measures tell—and what’s hiding backstage?”

Experimental Design to Control for Confounds

What do baking cookies and clinical trials have in common? Both need careful planning to avoid burnt edges—or misleading results. Let’s explore three simple ways researchers keep their studies fair and accurate.

Randomization, Blocking, and Minimization

Randomization mixes participants like shuffling cards. Imagine testing two cookie recipes. If you let bakers choose their recipe, early birds might pick Option A, skewing results. Random assignment tends to spread hidden factors (like baking skill) evenly across groups, especially in larger samples. A study on SAT scores used this to balance income levels between test groups.

Blocking groups similar subjects. Think of a garden experiment comparing rose fertilizers. You’d group plants by sunlight exposure first. Researchers used this in a mouse study, analyzing males and females separately to control for sex differences. It’s like comparing apples to apples, not oranges.

Minimization balances known factors. Suppose you’re testing cold remedies. If more seniors join Group A, their weaker immune systems might skew results. Minimization adjusts assignments to keep age proportions even. It’s like portioning cake slices so everyone gets equal frosting.

| Method | Purpose | Example |
|---|---|---|
| Randomization | Spread hidden factors evenly | Assigning students randomly to tutoring groups |
| Blocking | Compare within similar groups | Testing painkillers separately on athletes vs. office workers |
| Minimization | Balance visible traits | Ensuring equal numbers of smokers in drug trial groups |
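Minimization can be sketched in a few lines. This Python example is a simplified, single-factor version (real trial methods such as Pocock–Simon minimization balance several factors at once and often add a random element to every assignment): each newcomer goes to the arm with fewer people of the same age group so far, with ties broken at random. The function name and the enrollment stream are hypothetical.

```python
import random
from collections import Counter

def minimize(participants, arms=("A", "B"), seed=0):
    """Assign each participant to the arm with the fewest members of the same
    age group so far; ties are broken at random."""
    rng = random.Random(seed)
    counts = Counter()  # (age_group, arm) -> number assigned so far
    assignments = []
    for age_group in participants:
        fewest = min(counts[(age_group, arm)] for arm in arms)
        candidates = [arm for arm in arms if counts[(age_group, arm)] == fewest]
        arm = rng.choice(candidates)
        counts[(age_group, arm)] += 1
        assignments.append((age_group, arm))
    return assignments, counts

# Hypothetical enrollment stream: 8 seniors and 12 younger adults, mixed order.
stream = ["senior", "young", "young", "senior", "young"] * 4
assignments, counts = minimize(stream)
for group in ("senior", "young"):
    print(group, counts[(group, "A")], counts[(group, "B")])
```

It prints `senior 4 4` and `young 6 6`. Because each assignment goes to the emptier arm, the two arms never differ by more than one person within an age group.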

Why does proportion matter? If 80% of Group A are marathon runners in a fitness study, their natural stamina could mask a supplement’s true effect. Proper design ensures groups mirror each other—like matching puzzle pieces.

Next time you plan a study, ask yourself: “Are we comparing apples to apples?” A little structure upfront prevents thorny conclusions later.

Advanced Topics: Vector Interpretation and Correlation Reversal

Imagine arrows on a weather vane pointing north—until you zoom out and see they’re part of a swirling storm. Data works similarly. Vector interpretation helps us see how individual trends can flip when grouped.

Think of each data point as an arrow whose direction shows a relationship, like exercise linked to lower cholesterol. This scenario is a classic illustration of Simpson’s paradox, where the overall correlation can mislead.

Here’s the twist: when the arrows are grouped by age, exercise might reduce cholesterol in every group. Combined? The overall trend could show exercise increasing cholesterol.

How? In such a sample, older people exercise more but also have higher cholesterol simply because of their age. The weight of each age group tilts the total picture, which is why each variable must be considered in the analysis.

Researchers like Percy Mistry found this pattern in brain studies. Their paper showed how blood flow patterns reversed when analyzing individuals vs. groups.

It’s like a choir singing in harmony but sounding off-key when recorded from the back row. It shows how tricky correlations can be to interpret.

Three steps explain correlation reversal:

- Plot individual relationships (like exercise vs. health)

- Group data by hidden factors (age, location)

- Watch how distributions flip the overall trend

Another paper on retail sales revealed similar flips. Morning shoppers bought more electronics, evening buyers preferred groceries. Combined data suggested weak sales—until analysts separated time distributions.

This case illustrates how different contexts can affect success rates in sales.

Key takeaway? Always ask: “Are we seeing arrows or storms?” Researchers stress that true patterns often hide in the clusters, not the crowd.

The Sure Thing Principle and Causal Analysis

Imagine a school cafeteria testing two diets. Students on Diet A gain 5 pounds on average, while Diet B groups lose 3 pounds. But when split by dining hall, both diets show weight loss.

How? This hypothetical example shows that how we analyze data matters as much as the numbers themselves.

Insights from Judea Pearl and Modern Causality

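Leonard Savage’s sure-thing principle, which Judea Pearl has analyzed in causal terms, says: if Diet B works better in every subgroup, it should work better overall. For genuine causal effects this holds, as long as choosing a diet doesn’t change who ends up in each subgroup. But in raw observational data, hidden factors, like late-night pizza runs in certain dorms, can make it appear to fail.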

Pearl’s causal models help untangle these knots by asking: “What’s really causing the change?”
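One tool from this causal toolkit is the adjustment formula: estimate each treatment’s success as if everyone had received it, by averaging its within-stratum success rates over the whole population’s stratum sizes, P(success | do(t)) = Σ_z P(success | t, z) P(z). The Python sketch below applies it to the kidney stone counts from the Charig et al. (1986) study discussed in this article. It assumes, as the usual textbook reading does, that stone size is the only confounder; if other hidden factors influenced both treatment choice and outcome, this adjustment would not be enough.

```python
# (successes, patients) by stone size and treatment
data = {
    ("small", "A"): (81, 87), ("small", "B"): (234, 270),
    ("large", "A"): (192, 263), ("large", "B"): (55, 80),
}
sizes = ("small", "large")
total = sum(n for _, n in data.values())

# P(size) across all 700 patients, regardless of treatment received
p_size = {z: sum(data[(z, t)][1] for t in ("A", "B")) / total for z in sizes}

def adjusted_success(treatment: str) -> float:
    """Adjustment formula: sum over z of P(success | treatment, z) * P(z)."""
    return sum(
        data[(z, treatment)][0] / data[(z, treatment)][1] * p_size[z] for z in sizes
    )

def naive_success(treatment: str) -> float:
    """Raw pooled success rate, ignoring stone size."""
    s = sum(data[(z, treatment)][0] for z in sizes)
    n = sum(data[(z, treatment)][1] for z in sizes)
    return s / n

for t in ("A", "B"):
    print(f"{t}: naive {naive_success(t):.1%}, adjusted {adjusted_success(t):.1%}")
```

The naive comparison favors B (about 83% vs. 78%), while the adjusted one favors A (about 83% vs. 78%), matching the subgroup results.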

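Take Lord’s paradox, a thought experiment published by Frederic Lord in 1967 about students’ weights in university dining halls. Here is an illustrative version with hypothetical numbers:

| Method | Diet A Result | Diet B Result | Conclusion |
|---|---|---|---|
| Change Scores | +5 lbs | -3 lbs | B wins |
| ANCOVA | -2 lbs | -1 lbs | A wins |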

Both methods used the same data! The sure thing principle reminds us to:

- Check if subgroups share hidden influences

- Map cause-effect relationships visually

- Question analysis methods before trusting results

Next time you see conflicting reports—whether diets or business strategies—ask: “Are we measuring causes or just patterns?” Like choosing between cake and salad, the right answer depends on how you track the crumbs.

Bridging Theory with Practical Examples

How can a diet work in every neighborhood but fail citywide? Let’s explore how success rates and hidden factors shape real-world outcomes across fields.

Applications Across Epidemiology and Social Science

In a 2020 birth weight study, researchers found a puzzling pattern. Overall, low-birth-weight babies had lower rates of high blood pressure as adults. But when grouped by current adult weight, the trend reversed: within those groups, adults born with low birth weight faced a 56.9% risk vs. 55% for those born heavier.

Hidden factors like nutrition access explained the flip.

Social science offers similar twists. SAT scores for nonwhite students jumped 15 points in a decade—outpacing white peers’ 8-point gain. Yet overall averages rose just 7 points. Why? More nonwhite test-takers joined the pool, altering group proportions.

| Category | Observation | Hidden Factor | Real Story |
|---|---|---|---|
| Epidemiology | Low birth weight = lower risk | Adult weight groups | Higher risk within subgroups |
| Social Science | Rising SAT scores | Demographic shifts | Changing participant ratios |
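The SAT pattern is a composition effect, and a short Python sketch shows the mechanism. The shares and mean scores below are hypothetical, chosen only to match the subgroup gains in the text (+8 and +15), not to reproduce the real SAT data.

```python
# Hypothetical (share of test-takers, mean score) per group in two years.
year1 = {"white": (0.80, 500), "nonwhite": (0.20, 440)}
year2 = {"white": (0.70, 508), "nonwhite": (0.30, 455)}

def overall(year: dict) -> float:
    """Overall mean score: group means weighted by each group's share."""
    return sum(share * mean for share, mean in year.values())

for group in year1:
    print(f"{group}: +{year2[group][1] - year1[group][1]} points")
print(f"overall: +{overall(year2) - overall(year1):.1f} points")
```

Both groups improve (+8 and +15), yet the overall mean rises only about 4.1 points, because the lower-scoring group grew from 20% to 30% of test-takers.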

These cases show how causal inference helps separate true effects from data illusions. By asking “What’s grouped here?”, researchers avoid false conclusions. A drug might seem ineffective until we split data by age or genetics.

Next time you see a surprising statistic, ask: “Could hidden teams be playing?” Like puzzle pieces, subgroups often hold the full picture.

Implications for Decision-Making and Policy Analysis

Imagine a school district where math scores improve in every grade, yet the overall average drops. This kind of scenario shows how success rates can deceive when we ignore hidden groups.

A 2023 education study found such reversals in 17% of policy evaluations, often leading to misguided funding decisions.

Take COVID-19 mortality data. Early reports suggested higher death rates in vaccinated groups. But when split by age and health status, the pattern reversed.

Misreading the pooled figures could have fed harmful policy decisions; comparing death rates within age and health-status groups removed the illusion.

Three common pitfalls emerge:

- Assuming bigger numbers mean clearer truth

- Overlooking how group sizes weight results

- Trusting surface-level success rates without context

| Perspective | School Funding Example | Reality |
|---|---|---|
| Aggregated | District A outperforms District B | More affluent schools skew averages |
| Grouped | District B wins in equal-income comparisons | True performance emerges |

A university’s gender equity report showed two different stories. Overall hiring favored men, but department-level data revealed equal success rates. Administrators almost implemented flawed diversity policies before discovering application patterns explained the gap.

How does this happen? When two different analysis methods—like comparing totals vs. subgroups—clash, even experts get whiplash. The key lies in asking: “What’s grouped together here?” before drawing conclusions.

Next time you review policy data, play detective. Split numbers by neighborhood, income level, or time periods. You might find the real story hiding behind the averages.

Applying the Simpson Paradox Mental Model to Real-World Analysis

What if a baseball player had a higher batting average than a teammate in each of two seasons, yet a lower average over both seasons combined? This twist isn’t just for sports; it happens daily in business and healthcare. Let’s explore how hidden patterns shape decisions when we look beyond surface data.

Case Studies in Business and Clinical Settings

A retail chain faced this puzzle. Store A outperformed Store B in both urban and rural locations separately. Combined, Store B looked better. Why? Store A had more rural outlets with lower foot traffic.

The sure thing principle seemed broken—until analysts checked location weights.

| Case | Surface Result | Hidden Factor | Real Story |
|---|---|---|---|
| Retail Chain | Store B wins overall | Location distribution | Store A better per location type |
| Drug Trial | Treatment harmful | Gender groups | Effective for both genders |

In healthcare, a 2020 drug study showed similar flips. Overall, Treatment X had lower recovery rates. Split by gender, it worked better for both men and women.

Judea Pearl’s causal models helped explain this—more high-risk patients received X, skewing totals.

Three lessons for your next analysis:

- Always split data by at least one plausible hidden factor (like gender or region)

- Check if group sizes tilt the scales

- Use causal diagrams, like those Judea Pearl popularized, to map hidden influences

Ever faced confusing sales reports? Try slicing them by customer age or purchase time. The sure thing might be hiding in plain sight.

Integrating Lessons from Experimental Research

Why do some studies find opposite results when using the same data? The answer often lies in design choices that shape how we collect and group information. Proper planning helps avoid mix-ups caused by hidden factors.

| Method | What It Does | Real-World Example |
|---|---|---|
| Random Shuffling | Mixes participants evenly | Assigning students to teaching styles randomly |
| Group Matching | Compares similar subjects | Testing diets separately for athletes and office workers |
| Factor Balancing | Keeps key traits equal | Ensuring equal numbers of smokers in drug trials |

These approaches stop hidden patterns from twisting results. A 2020 sleep study showed this clearly. When split by age groups, a new pillow helped everyone sleep longer. Combined data suggested no benefit—because more older adults (who sleep less) used the product.

Three lessons stand out:

- Always check if groups have matching traits

- Compare results within similar categories first

- Bigger samples don’t fix bad grouping

Next time you see conflicting findings, ask: “How did they set up the experiment?” Like building with Legos, careful design creates stable results you can trust.

Conclusion

Have you ever made a decision based on numbers that later surprised you? This guide showed how data can whisper one story while hiding another. From hospital outcomes to school admissions, we’ve seen how combining groups can flip trends—not through magic, but through hidden weights and contexts.

This phenomenon is Simpson’s paradox: a trend appears within several groups but disappears or reverses when the groups are combined.

The real lesson? Cause-and-effect relationships often hide behind layers. That treatment that wins in every age group but loses overall? Check whether more high-risk patients received it. Those test scores that rose in every group but barely moved overall? Look for demographic shifts in who took the test.

Understanding effect sizes and the role of each variable is crucial to interpreting success rates.

Three simple rules protect against data traps:

- Split numbers by meaningful categories first

- Ask “What’s grouped together here?” before trusting totals

- Remember: bigger samples don’t fix bad groupings

Next time you review reports—whether sales figures or health studies—pause. Could hidden patterns be flipping the script? Like solving a mystery, finding truth starts with questioning surface-level answers.

You’ve now got the tools to spot these reversals and make smarter calls.

Data doesn’t lie, but it often wears disguises. By understanding cause and effect dynamics, you’ll see through the costumes. Ready to try?

Your next table of data might just reveal its secrets, as long as you check how it was grouped before trusting the totals.