Have you ever tried to change someone’s mind, only to watch them dig in deeper? This stubborn reaction isn’t just frustrating. It reflects a cognitive blind spot captured by the backfire effect mental model: when people face facts that clash with their beliefs, they often cling tighter to those ideas instead of updating them.

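Researchers Brendan Nyhan and Jason Reifler documented this pattern in a 2010 study. When they showed people corrections of false political claims, such as the claim that Iraq had weapons of mass destruction before the 2003 invasion, some of the participants most committed to that belief became even more convinced of it. Why? Because our brains treat beliefs like armor: they shield us from feeling wrong.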

This isn’t just about politics. Imagine a user struggling with a website feature. If you correct their mistake too bluntly, they might ignore your help or quit the site entirely.

Designers and communicators need to understand this quirk of human thinking. How can we share information without triggering resistance? The answer starts with empathy.

Key Takeaways

- The backfire effect mental model occurs when contradictory evidence strengthens existing beliefs.

- Famous studies, like Nyhan and Reifler’s 2010 research, show this pattern when people are given corrections of real political misinformation.

- UX designers and marketers must avoid triggering defensive reactions in users.

- Approaching disagreements with curiosity works better than confrontation.

- Understanding this model helps improve communication in both personal and professional settings.

Backfire Effect Mental Model in Everyday Life

Why do we stick to old ideas even when facts say otherwise? Our brains love shortcuts. Psychologists call these shortcuts cognitive biases, and they shape how we process information.

A closely related idea is cognitive dissonance: the uneasy feeling when new evidence clashes with what we already believe. That discomfort can push a person to reject the new information, which is the pattern the backfire effect mental model describes.

Defining Cognitive Dissonance

Imagine learning that your favorite snack has harmful ingredients. You might downplay the risks or seek “proof” it’s still safe. This mental tug-of-war is a classic example of cognitive dissonance.

Studies show people often ignore facts to avoid this discomfort. In a design review, for instance, stakeholders might defend a flawed design choice because changing it feels like admitting failure.

How Conflicting Beliefs Influence Behavior

When beliefs collide with reality, people get creative. They’ll cherry-pick data, dismiss experts, or double down on old habits. Ever argue with a friend about a historical fact? Both sides might dig for numbers to “win,” even if it wastes time. Our minds crave consistency—even when it’s irrational.

| Situation | Common Reaction | Outcome |
|---|---|---|
| Debating vaccine safety | Focus on rare side effects | Reject mainstream evidence |
| Choosing unhealthy foods | Emphasize “moderation” benefits | Ignore nutritional studies |
| Receiving UX feedback | Blame users for “not trying” | Delay design improvements |

Isn’t it fascinating how we’ll bend reality to protect our self-image? Recognizing these patterns helps us communicate better, whether we are explaining climate data or redesigning a website. Next, let’s explore where these biases come from.

Roots of the Backfire Effect Mental Model

Ever notice how correcting someone can make them defensive? Our brains work hard to protect what we think we know. When fresh facts clash with deep-rooted ideas, people often twist logic rather than change their minds. This is the pattern at the heart of the backfire effect mental model.

This isn’t about being stubborn; it’s how our wiring handles threats to our worldview. Understanding this behavior matters because it shows how closely our beliefs are tied to our identities.

Psychological Mechanisms Behind the Phenomenon

Three key forces drive this reaction. First, consistency bias makes us favor familiar ideas. Second, emotional investment in certain topics turns debates into personal battles. Third, we filter new information through existing beliefs like a sieve—keeping what fits, tossing what doesn’t.

Hot-button issues like health choices or tech debates often spark this. Imagine someone who believes “natural” remedies always work best.

Show them studies proving otherwise, and they might recall one success story to dismiss your data. Why? Their brain treats the belief as part of their identity.

| Situation | Psychological Response | Outcome |
|---|---|---|
| Debating health misinformation | Focus on anecdotal exceptions | Reject peer-reviewed research |
| Discussing political topics | Label opposing data as “fake news” | Strengthen party loyalty |
| Challenging tech misconceptions | Cite outdated expert opinions | Delay adopting safer practices |

Ever held onto a belief longer than you should’ve? That’s your mind fighting to avoid the discomfort of being wrong.

By understanding these cognitive bias patterns, we can share insights without making others feel attacked. Next time you face resistance, ask: “What makes this idea so important to them?”

Roots and Cognitive Dissonance

Ever found yourself defending a belief harder when someone questions it? That’s cognitive dissonance at work. Psychologist Leon Festinger described this tension in his 1957 book, A Theory of Cognitive Dissonance.

He found that people hate holding two conflicting ideas. To ease the discomfort, they’ll often double down on their original stance rather than reconsider.

Festinger’s Theory and the Backfire Effect

Imagine supporting a political candidate. If a scandal breaks, fans might dismiss the news as “fake” instead of reevaluating their choice. Why? Admitting they were wrong threatens their self-image.

Psychologists call this belief perseverance: clinging to preexisting beliefs even when evidence challenges them.

Confirmation bias teams up with dissonance like peanut butter and jelly. We seek information that matches our worldview and ignore what doesn’t.

A designer might ignore user complaints about a feature because changing it would mean admitting their first idea flopped.

| Scenario | Common Response | Result |
|---|---|---|
| Debating climate policies | Dismiss opposing studies | Strengthen original position |
| Loyalty to a brand | Ignore negative reviews | Repeat purchases |
| Receiving UX critiques | Blame “confused users” | Miss improvement chances |

Ever scrolled past facts that made you uneasy? That’s your brain shielding you from dissonance. Next time your beliefs get challenged, pause. Ask: “Am I resisting because this idea matters to who I am?”

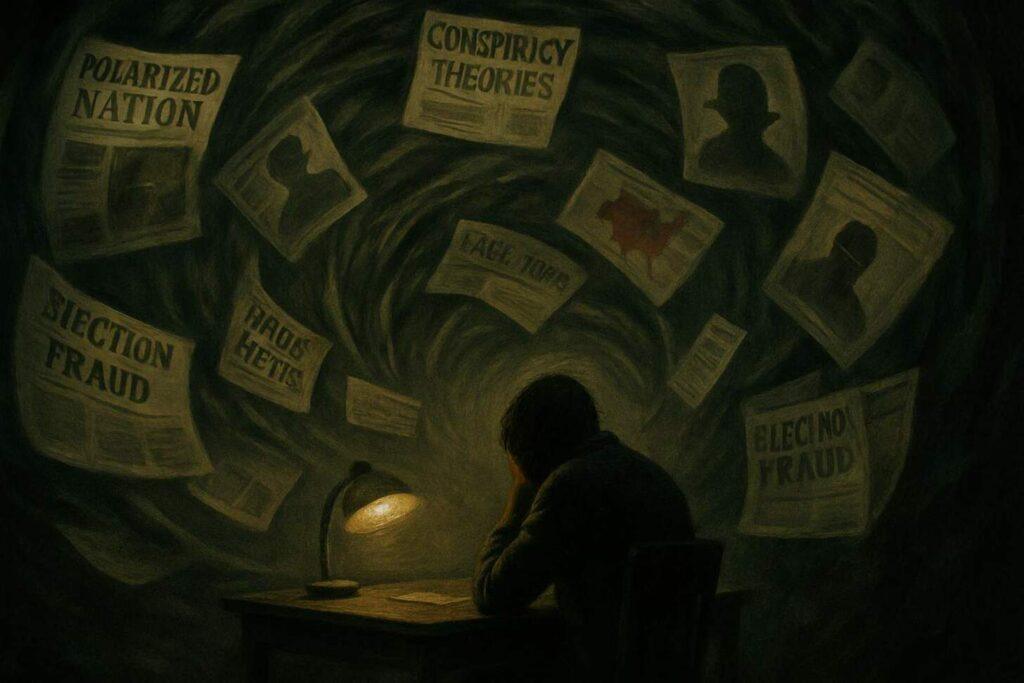

Political Misinformation and Self-Protection

What happens when facts collide with political identity? Researchers Brendan Nyhan and Jason Reifler uncovered a surprising pattern in their 2010 study. They tested how people reacted to corrections of false or misleading claims about topics such as Iraq’s weapons of mass destruction, tax cuts, and stem cell research.

Instead of updating their views, some participants held on to their original beliefs even more firmly. Why? Because facts that threaten someone’s political tribe feel like personal attacks.

Study Insights: Nyhan and Reifler (2010)

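The team presented subjects with mock news articles containing a misleading claim, and some versions included a correction. The correction often failed to change the minds of the people most committed to the belief. In some cases it backfired: after reading a correction stating that Iraq did not have weapons of mass destruction, the most conservative participants became more likely to believe that it did.

Corrections on tax policy showed a similar pattern. Correcting the false claim that President George W. Bush’s tax cuts increased government revenue made some conservative participants more likely to believe it. It wasn’t about facts; it was about protecting who they were.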

Role of Worldview in Reinforcing Beliefs

Political views often act like team jerseys. Challenging them feels like booing someone’s favorite player. This identity protection explains why debates about climate policy or healthcare get heated so fast. People aren’t just defending ideas—they’re guarding their sense of belonging.

Ever noticed how friends share articles that match their existing views? That’s confirmation bias teaming up with tribal loyalty. When new information clashes with group identity, brains prioritize fitting in over being right. It’s not stubbornness—it’s survival in social ecosystems.

How have you seen politics shape what people believe? Maybe during family dinners or online debates? Recognizing this psychology helps us discuss tough topics without triggering defensive behavior. After all, changing minds starts with respecting why those minds cling so tightly.

Climate Change Skeptics and Certain Beliefs

Have you ever debated climate science with someone who dismisses every chart you share? Studies reveal a curious pattern: 25% of skeptics become more certain of their views after seeing global warming evidence. It’s not about facts—it’s about how our brains process threatening information.

When Evidence Strengthens Doubt

Imagine showing someone data on melting Arctic ice. Instead of reconsidering, they might argue, “My town had a cold winter!” This reaction isn’t random.

Research shows people often use local experiences to dismiss broader trends. One 2023 study found skeptics who saw temperature records cherry-picked explanations like “natural cycles” to justify their stance.

How we share facts matters. Presenting stats through graphs? Some see it as “elitist.” Sharing stories about farmers adapting to droughts? That sparks more open conversations. The way information is framed can either bridge gaps or widen them.

| Situation | Common Response | Result |
|---|---|---|
| Sharing global temperature data | “This summer felt normal” | Dismiss scientific consensus |
| Discussing renewable energy | “Wind turbines kill birds” | Reject policy changes |
| Mentioning extreme weather | “We’ve always had storms” | Ignore climate linkages |

Why cling to beliefs against mounting proof? For many, environmental views tie to identity—like being a “practical realist” versus a “tree hugger.”

Challenging these labels feels personal. Next time you discuss climate issues, ask: “What values make this topic sensitive for them?”

Role of Confirmation Bias in Matching Beliefs

Ever stick to a favorite restaurant despite a bad meal? That’s confirmation bias in action—our tendency to seek information that matches what we already believe.

This mental shortcut shapes how we interpret everything from news stories to user feedback. When paired with the backfire effect mental model, it creates a stubborn loop: contradictory facts often make people cling tighter to their original views.

Linking Confirmation Bias to Defensive Thinking

Imagine a political candidate’s supporter. They’ll likely notice every positive poll while dismissing criticism as “biased.” This selective focus isn’t accidental; it’s how brains protect cherished ideas. Designers face similar traps. A team might ignore user complaints about a feature because early testers loved it. The result? Stubbornness replaces progress.

Personal experience fuels this cycle. If someone believes “all apps crash,” they’ll remember every glitch but forget smooth sessions. In UX, a system built around assumptions (like “users prefer simplicity”) might overlook power users’ needs. Ever designed something you thought was perfect—only to discover users hated it?

How often do you cherry-pick facts that feel comfortable? Recognizing this bias helps us pause before dismissing feedback. Next time you encounter pushback, ask: “What am I filtering out to keep my worldview intact?”

The Backfire Effect in UX Design

Ever redesigned a website feature only to face user revolt? When updates clash with familiar routines, people often push back—even if the change improves functionality.

This friction isn’t simply stubbornness. It is a natural response to disrupted habits, closely related to the backfire effect: users feel their choices are being overridden, and that feeling can significantly hurt their satisfaction with the product.

Real-World Impact on Interface Changes

Snapchat’s 2018 redesign offers a classic example. The app moved Stories and chats to less intuitive tabs, and a petition demanding a reversal gathered 1.2 million signatures. Why? Users felt their navigation habits had been dismissed. Similarly, when Facebook shifted its News Feed algorithm in 2018 to prioritize posts from friends and family, engagement with pages dropped as users missed updates from pages they followed.

| Platform | Change | User Reaction |
|---|---|---|
| Snapchat | Redesigned navigation | Mass petitions, usage decline |
| Facebook | News Feed algorithm update | Complaints about missing content |
| Gmail | Smart Compose rollout | Initial distrust of AI suggestions |

User Reactions and Design Considerations

How can designers avoid these pitfalls? Gradual rollouts help. When Gmail introduced Smart Compose, they allowed users to disable it—a decision that reduced backlash.

Clear messaging matters too. Explaining why a feature changed (“This helps you find friends faster”) builds trust better than silent updates.

Next time you tweak an interface, ask: “Does this respect how people interact with our product daily?” Involving users as part of the update process often turns critics into collaborators.
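The advice above, roll out gradually and let people switch back, can also be expressed in code. The sketch below is a minimal illustration that assumes a hypothetical `FeatureRollout` helper written for this article. It is not how Gmail, Snapchat, or any other product actually ships features. Each user is hashed into a stable bucket from 0 to 99. The new design is shown only to buckets below the rollout percentage, and any user who opts out keeps the old version no matter what the percentage is.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class FeatureRollout:
    """Hypothetical helper: shows a redesign to a stable share of users
    and always honors a user's request to switch back."""

    name: str      # feature identifier, e.g. "new-checkout" (illustrative)
    percent: int   # share of users (0-100) who get the new design by default
    opted_out: set[str] = field(default_factory=set)

    def _bucket(self, user_id: str) -> int:
        # Hash feature name + user id so each user lands in the same bucket (0-99) every time.
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode("utf-8")).hexdigest()
        return int(digest, 16) % 100

    def is_enabled(self, user_id: str) -> bool:
        # An explicit opt-out always wins over the rollout percentage.
        if user_id in self.opted_out:
            return False
        return self._bucket(user_id) < self.percent

    def opt_out(self, user_id: str) -> None:
        # Called when the user clicks something like "switch back to the old layout".
        self.opted_out.add(user_id)


# Usage: start with 10% of users, then let one of them switch back.
rollout = FeatureRollout(name="new-checkout", percent=10)
users = [f"user-{i}" for i in range(1000)]
exposed = [u for u in users if rollout.is_enabled(u)]
print(f"{len(exposed)} of {len(users)} users see the new checkout")
if exposed:
    rollout.opt_out(exposed[0])
    print(rollout.is_enabled(exposed[0]))  # False: the user's choice is respected
```

Because each user's bucket is stable, raising `percent` from 10 to 50 only adds users. Nobody who already has the new design loses it, and anyone who opted out stays on the old layout.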

Strategies to Mitigate the Backfire Effect

How do you share tough truths without sparking resistance? The answer lies in balancing facts with emotional awareness. When people feel attacked, they armor up. But when they feel heard, they open up. Let’s explore two proven approaches that soften defensive reactions.

Empathetic Communication Techniques

Start by validating feelings before sharing facts. Instead of saying “You’re wrong,” try: “I see why that makes sense to you. Could we explore another angle?”

This approach reduces the role of ego in disagreements. Storytelling works wonders here—framing data within relatable narratives helps bypass resistance.

Imagine redesigning a checkout process. Users might hate changes initially. A message like “We noticed you might prefer the old layout—click here to switch back” builds trust. It shows respect for their comfort while gently introducing improvements.

Incremental Information Presentation

Dumping too much evidence at once overwhelms people. Break complex ideas into bite-sized pieces. For example, software updates often use progressive tutorials instead of massive overhaul announcements. This kind of gradual learning matches how brains adapt to new patterns.

| Old Approach | Improved Strategy | Result |
|---|---|---|
| Forced feature changes | Opt-in beta testing | Higher user adoption |
| Technical jargon | Interactive explainers | Clearer understanding |
| Blunt error messages | Guided troubleshooting | Reduced frustration |

Ever tried adding one new habit at a time instead of ten? The same logic applies here. Small adjustments in how we present information can turn battles into breakthroughs. What subtle shift could you make today to encourage openness?

Case Studies and Real-World Examples

What happens when a familiar app suddenly feels foreign? Major platforms often learn the hard way that even well-intentioned updates can spark backlash. Let’s explore how sudden shifts in design and branding trigger unexpected reactions.

When Platforms Pivot Too Fast

Twitter’s 2023 rebrand to “X” offers a textbook example. Removing the iconic bird logo led to a 30% drop in App Store ratings within weeks. Users complained about lost brand recognition—like walking into a favorite café that changed its menu overnight.

Similarly, Facebook’s 2018 News Feed update prioritizing posts from friends and family caused a 20% dip in page engagement. Creators felt their work was being hidden without explanation.

Redesigns That Tested User Loyalty

Gmail’s Smart Compose feature faced early resistance despite its AI-powered convenience. Initial rollout data showed 42% of users disabled it, fearing loss of control over their writing style.

Yet, by allowing opt-outs and gradual adoption, Google saw acceptance rise to 78% within six months. This mirrors how people adapt to new tools when given autonomy.

| Platform | Change | User Reaction | Outcome |
|---|---|---|---|
| Twitter/X | Logo & name removal | 1-star review surge | Brand trust decline |
| Facebook | News Feed algorithm shift | Creator complaints | Engagement drop |
| Gmail | Smart Compose launch | Initial distrust | Gradual acceptance |

Ever disliked a website update so much you almost switched platforms? These cases show how attachment to familiar systems shapes our choices. By studying these patterns, designers can balance innovation with respect for user habits—turning potential friction into collaborative progress.

Impact on Decision-Making and Behavior

What if every fact you shared made someone more stubborn? This paradox shapes choices from grocery lists to corporate strategies. When beliefs harden against new information, people double down—even when logic suggests otherwise.

Impact on Personal Choices and Business Decisions

Daily decisions often mirror political debates. A parent might refuse vaccines despite pediatric advice, while a manager rejects data showing their project is failing. Both cases show how research on belief reinforcement applies beyond theory.

Companies face similar challenges. Imagine a team clinging to outdated software because “it’s always worked.” Resistance to upgrades can cost millions, yet leaders often blame employees instead of addressing psychological roadblocks.

| Situation | Personal Impact | Business Impact |
|---|---|---|
| Debating health choices | Ignore medical guidance | Higher insurance costs |
| Brand loyalty conflicts | Reject better alternatives | Miss market opportunities |
| Internal feedback | Defend flawed ideas | Stall innovation |

Ever pushed back against a policy change at work? That tug-of-war between habit and progress affects entire organizations. Teams that frame updates as collaborative improvements see 3x faster adoption than those using top-down mandates.

Understanding this behavior helps everyone—from parents negotiating bedtimes to CEOs steering mergers. Next time you face resistance, ask: “How can I present this as a shared solution rather than a correction?”

Conclusion

Why do heartfelt conversations sometimes push people further apart? When facts challenge deeply held beliefs, many instinctively defend their views rather than reconsider.

Studies show that direct corrections can strengthen misconceptions, much like arguing about climate data only to see skepticism grow. This isn’t about logic; it’s about how our brains protect what feels true.

Recognizing these patterns changes everything. Whether discussing politics or redesigning apps, understanding why people resist helps us communicate better.

Instead of lecturing, try asking questions. Instead of proving points, share relatable stories. Small shifts in approach can turn debates into dialogues.

Think about your last disagreement. Did pushing harder help? Probably not. By framing new ideas as collaborative discoveries—not corrections—we support growth without triggering defenses. Next family debate or team meeting, pause. Ask: “What values might make this topic sensitive for them?”

Every mindful interaction chips away at stubborn beliefs—including our own.