Imagine trying to predict tomorrow’s weather using a map from 1920. Sounds impossible, right? That’s the core idea behind the “all models are wrong” mental model. As statistician George Box famously said, “All models are wrong, but some are useful.” These tools simplify our messy world to help us make decisions, even though they’ll never capture every detail.

Take Einstein’s general theory of relativity. It reshaped physics, yet even this groundbreaking model breaks down at the singularities inside black holes. Climate forecasts and pandemic projections work the same way. They’re not perfect, but they guide us through uncertainty.

Why does this matter? Recognizing flaws keeps us humble. It pushes scientists to refine theories and helps everyday people avoid clinging to outdated ideas. A model’s real power lies in its ability to adapt—not in being “right.”

Key Takeaways

- Models simplify reality but can’t include every detail.

- Even the best frameworks, like Einstein’s relativity, have limits.

- George Box’s quote reminds us to focus on usefulness, not perfection.

- Updating models is crucial as new information emerges.

- Flawed tools still help navigate complex problems like climate change.

Understand the Imperfect World of Models

How do weather apps predict rain hours before the first drop falls? They use simplified versions of reality—like cartoon maps showing clouds and sun. Our brains crave these shortcuts because processing every raindrop’s path would overwhelm us.

Think of frameworks as cheat sheets for life’s toughest quizzes: they can be wrong in the details yet still help people understand complex systems.

Why We Need Imperfect Tools

Models are a bit like streetlights. They don’t light up every corner, but they help us avoid potholes in our understanding of complex systems.

Take GPS navigation. It corrects for relativistic effects on satellite clocks, yet it can miss a road closure caused by yesterday’s storm. Still, it gets you home faster most of the time, which shows how a model can be wrong in places and remain effective.

All Models Are Wrong Mental Model: Usefulness Over Perfection

Ever chosen a commute route using traffic apps? Those colored lines simplify complex patterns. They might miss a new construction zone, but they’re right often enough to trust. Box’s aphorism points to the same lesson: a tool doesn’t need to be flawless, just functional.

| Model Purpose | What’s Included | What’s Ignored |
|---|---|---|
| GPS Navigation | Satellite positions, speed data | Real-time accidents, roadwork |
| Economic Forecasts | Interest rates, employment stats | Sudden political changes |
| Disease Spread | Infection rates, population density | Individual immune responses |

These examples show how creators pick which details matter. Like packing for vacation—you bring shoes but leave the shoe polish. The key? Update your suitcase when seasons change. Models work similarly, growing stronger when we feed them fresh data.

Historical and Conceptual Background

Centuries ago, astronomers sketched the heavens with simple models, little knowing that their work would pave the way for Einstein. His 1915 general theory of relativity reshaped our understanding of gravity and predicted phenomena such as gravitational waves.

Yet even this masterpiece falters near black holes, proving that no model escapes imperfection, however much detail we include.

Evolution of Thought: From Stargazers to Supercomputers

Early scientists used wooden models of planets to explain orbits. Newton’s laws ruled for more than 200 years until Einstein showed their limits at speeds approaching that of light. Today’s climate simulations build on these lessons, simplifying ocean currents into manageable data points. Each leap forward? A reminder that every theory is a stepping stone, not a final destination.
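One way to see where Newton’s model stops being useful is to compare its kinetic energy formula with the relativistic one at different fractions of light speed. The sketch below is an illustration added for that purpose; the function names and the sample speeds are assumptions chosen for the example.

```python
import math

def newtonian_ke(beta: float) -> float:
    """Classical kinetic energy, (1/2) v^2, in units of m*c^2 (beta = v/c)."""
    return 0.5 * beta ** 2

def relativistic_ke(beta: float) -> float:
    """Relativistic kinetic energy, (gamma - 1), in units of m*c^2."""
    gamma = 1.0 / math.sqrt(1.0 - beta ** 2)
    return gamma - 1.0

def newtonian_shortfall(beta: float) -> float:
    """Fraction of the relativistic energy that Newton's formula misses."""
    return 1.0 - newtonian_ke(beta) / relativistic_ke(beta)

if __name__ == "__main__":
    # Sample speeds as fractions of light speed (illustrative choices)
    for beta in (0.01, 0.1, 0.5, 0.9):
        print(f"v = {beta:.2f}c  Newton misses {newtonian_shortfall(beta):.2%}")
```

At one percent of light speed the two formulas differ by less than a hundredth of a percent, while at 0.9c Newton’s formula misses well over half of the energy. The older model is not discarded; it simply has a range where it is useful.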

The Art of Leaving Things Out

Why do we strip details from complex systems? Imagine explaining rainbows to a child—you’d skip light refraction math and focus on colors. This “less is more” approach lets us tackle problems without drowning in data.

Medical researchers do this daily, using population-level trends to guide treatments while acknowledging that individual patients vary.

| Scientific Era | Model Breakthrough | Omitted Factor |
|---|---|---|
| 17th Century | Newton’s Gravity | Relativistic effects |
| Early 1900s | Quantum Theory | Macroscale applications |
| 21st Century | AI Predictions | Human intuition |

This pattern shows a vital truth: simplification isn’t weakness—it’s strategy. Just as painters leave blank spaces to highlight subjects, scientists focus on key variables.

The trick? Updating assumptions when fresh evidence emerges, like when gravitational wave detectors confirmed Einstein’s century-old predictions.

Real-World Applications and Examples

What do melting ice caps and hospital bed shortages have in common? Both rely on imperfect tools to shape life-changing choices.

Both show how simplified frameworks guide critical decisions, even when pieces of the puzzle are missing.

Climate Models: Uncertainty and Policy Impact

Scientists use climate projections like roadmaps for emission cuts. These simplified systems ignore local weather quirks but still predict rising sea levels. When the Paris Agreement set carbon targets, it leaned on these useful approximations. Coastal cities now build higher seawalls because models showed flood risks—despite not knowing exact timelines.

COVID-19: Navigating Uncertainty in Public Health Predictions

Remember when pandemic forecasts swung wildly? Early projections helped hospitals stock ventilators, though death estimates varied by thousands. Health officials used ranges, not exact numbers, to plan lockdowns.

This flexibility saved lives while admitting gaps in the data. Even the best models are wrong at times, so it pays to ask what might be missing from a prediction.

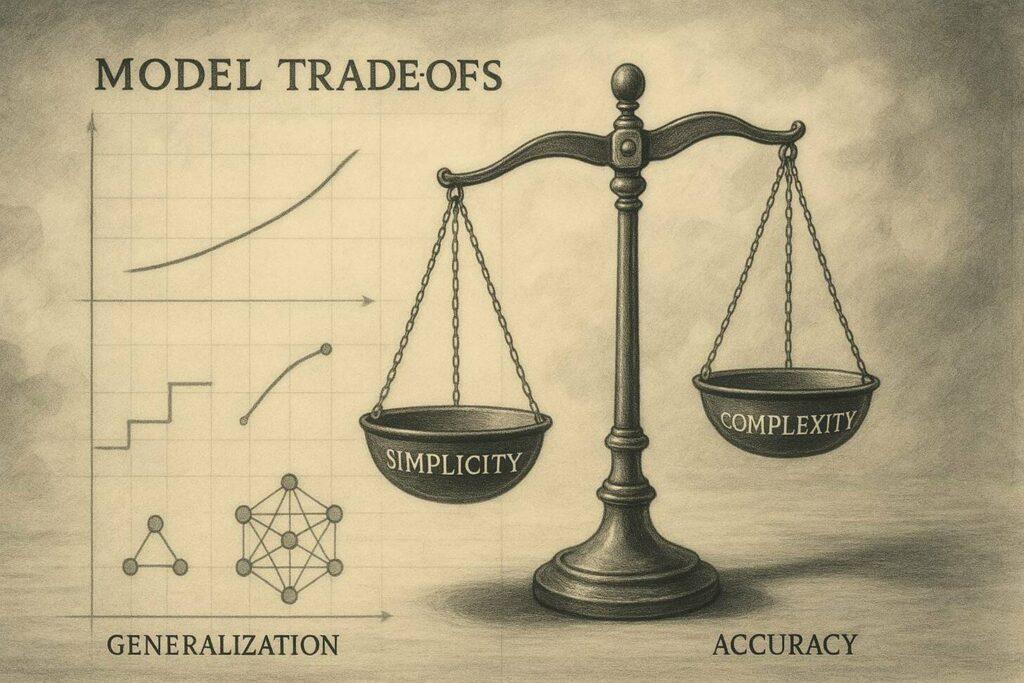

Financial and Healthcare Models: The Trade-Offs in Precision

In 2008, risk frameworks missed housing market cracks, contributing to an estimated $19.2 trillion in lost U.S. household wealth. Yet medical models succeed daily: drug trials predict side effects for most patients, though not everyone. Doctors use them like weather reports: prepare for rain, but keep sunglasses handy.

These examples share a lesson: better decisions come from asking “What’s missing?” Noticing a model’s blind spots helps us use its strengths wisely—like checking multiple weather apps before a picnic.

Benefits and Limitations of Using Models

Ever used a GPS that knows shortcuts but misses road closures? That’s the tightrope walk of using frameworks in decision-making. They shine in guiding choices—until unexpected potholes appear. The trick lies in balancing their strengths with awareness of blind spots.

Practical Utility versus Theoretical Flaws

Simplified tools help us act fast in a complicated world. Traffic apps ignore individual driving styles but still save time during rush hour. Economic forecasts skip sudden events like pandemics—yet help businesses plan budgets. These gaps don’t make frameworks useless, just incomplete.

Scientists combine multiple tools to reduce risks. Climate experts mix satellite data with ground observations. Doctors cross-check lab results with patient histories. This layered approach builds a safety net against single-model errors.

Risks of Oversimplification and Overcomplication

Ever tried following a recipe that skips key steps? Oversimplified frameworks create similar chaos. In 2008, financial tools ignored risky mortgages, fueling a crisis. Overly complex ones? Like packing every kitchen gadget for a camping trip—they become impractical.

| Benefit | Limitation | Real-World Example |
|---|---|---|
| Quick decisions | Misses rare events | Stock market crash predictions |
| Clear patterns | Ignores human behavior | Vaccine rollout timelines |
| Cost-effective | Struggles in extremes | Flood risk assessments |

Treat frameworks like a toolbox—use the right one for each job. Update them as conditions change, just like swapping winter tires for summer. Their power grows when we respect their limits while leveraging their insights.

Using the All Models Are Wrong Mental Model in Decision Making

Ever tried following a recipe that didn’t account for altitude? Your cake might collapse, not because the recipe was bad, but because it needed tweaking for your kitchen. This everyday challenge mirrors how we should use frameworks in our choices: adjust them to the conditions at hand.

In the spirit of George Box’s remark, tools become powerful when we treat them as works in progress rather than finished masterpieces.

Strategies for Refining Models with New Data

Emergency teams use ensemble modeling during hurricanes—combining 20+ weather predictions. This “teamwork” approach balances out individual errors, much like asking three friends for movie recommendations. When COVID-19 hit, hospitals blended case data with regional trends to update bed forecasts weekly.
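A minimal sketch of the ensemble idea, assuming the simplest possible blend (an unweighted average), could look like the following. The forecast values, the observed value, and the function name are hypothetical, and real forecasting centers use far more elaborate weighting schemes.

```python
from statistics import mean

def ensemble_forecast(forecasts):
    """Blend several forecasts by taking their unweighted average."""
    return mean(forecasts)

# Hypothetical rainfall predictions (mm) from five different models
member_forecasts = [12.0, 18.0, 9.0, 21.0, 15.0]
observed = 14.0  # hypothetical measurement taken afterwards

ensemble = ensemble_forecast(member_forecasts)
ensemble_error = abs(ensemble - observed)

# Average of the individual models' absolute errors, for comparison
average_member_error = mean([abs(f - observed) for f in member_forecasts])

print(f"Ensemble forecast: {ensemble} mm, error {ensemble_error} mm")
print(f"Average single-model error: {average_member_error} mm")
```

Because over-predictions and under-predictions partly cancel, the averaged forecast can never do worse than the typical individual model on this absolute-error measure, though any single member may still beat it on a given day.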

Try these practical steps:

- Cross-check tools: Use traffic apps and local radio reports

- Schedule “model check-ups”: Review assumptions every quarter

- Embrace ranges: Treat predictions as “30-50% chance” instead of absolutes
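The “embrace ranges” step above can be sketched in a few lines. This is only one possible approach, assuming the range is simply the lowest and highest estimate from several sources; the function name and the sample probabilities are hypothetical.

```python
def as_range(estimates):
    """Summarize several probability estimates (0 to 1) as a percent range."""
    low = round(min(estimates) * 100)
    high = round(max(estimates) * 100)
    return f"{low}-{high}% chance"

# Hypothetical rain probabilities from four different sources
rain_estimates = [0.30, 0.42, 0.50, 0.35]

summary = as_range(rain_estimates)
print(summary)
```

Reporting the spread rather than a single number keeps the disagreement between sources visible, which is the point of the exercise.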

| Approach | Benefit | Real-World Use |
|---|---|---|
| Multi-model blending | Reduces single-source bias | Stock market crash predictions |
| Data-driven updates | Catches emerging patterns | Flu vaccine effectiveness tracking |
| Uncertainty ranges | Prevents overconfidence | Climate change impact timelines |

A financial firm avoided 2022 crypto losses by updating risk models monthly—not yearly. Their secret? Treating each market shift as new ingredients in that altitude-adjusted cake recipe. What outdated assumptions might be skewing your decisions?

Conclusion

Picture planning a road trip with a map that shows highways but not detours. This mirrors how frameworks shape our understanding—they highlight paths while hiding bumps.

In the spirit of statistician George Box’s remark, even flawed models become powerful when we treat them as works in progress.

From Einstein’s relativity theory to climate projections, every model simplifies our complex world. They help hospitals prepare beds during pandemics and cities plan for rising seas.

Their strength lies not in perfection, but in adapting as new data emerges—like updating your playlist when fresh tracks drop.

Here’s the takeaway: Use these tools like a trusted compass, but pack a backup map. Check traffic apps against live reports. Compare financial forecasts with market trends. The best decisions blend model insights with real-world observations.

What imperfect map will guide your next big choice? Whether navigating career moves or weekend plans, remember: Useful approximations beat perfect paralysis. Keep refining, stay curious, and let practical wisdom light your way.