Imagine holding a treasure map that claims “X marks the spot.” You dig for hours, only to find rusty nails. That is the “map is not the territory” mental model in action: a simplified guide to reality is never the reality itself.

Polish-American scholar Alfred Korzybski warned about this in 1931: “A map is not the territory it represents.” Just like old maps miss new roads, our brains often confuse shortcuts with truth.

Think of ideas as rough sketches. A weather app predicts rain, but you step outside to sunshine. Financial charts suggest stability—until markets crash. Why? Models simplify messy details to help us decide faster. But clinging to them blindly? That’s like using a 1990s roadmap for today’s city.

Ever bought something because reviews said “perfect,” then hated it? That’s your mind trusting a filtered version of reality. From NASA’s Mars rovers to urban traffic plans, we’ll explore how pros avoid this trap. Ready to spot when your brain’s shortcuts steer you wrong?

Key Takeaways

- The map is not the territory mental model: mental shortcuts help us decide quickly but often miss crucial details

- Alfred Korzybski’s famous warning reminds us models aren’t reality

- Outdated assumptions can lead to costly mistakes in finance and science

- Regular reality checks keep our thinking accurate and useful

- Real-world examples show why flexible thinking beats rigid rules

Intro: The Map is Not The Territory Mental Model

Ever followed GPS directions that led you to a dead end? That’s our first clue about representations versus actual landscapes. A paper chart shows highways and towns, but skips cracked sidewalks or blooming wildflowers nearby.

These simplified guides exist everywhere, from subway diagrams to how we imagine relationships work in our lives. In each case, the map leaves out important parts of the territory.

Defining Maps, Models, and Realities

Physical maps help navigate cities, while mental ones shape decisions. Think of a restaurant menu: photos show dishes, but leave out kitchen chaos or the chef’s secret ingredients. Similarly, models filter noise to highlight patterns.

Korzybski’s 1931 argument is often summed up with the line “the word ‘water’ isn’t wet.” It is a reminder that a map can’t capture the full experience of the territory: a representation always leaves something out.

| Map Features | Real-World Elements |
|---|---|
| Highway lines | Potholes, traffic flow |
| City symbols | Street performers, smells |
| Elevation marks | Wind patterns, animal trails |

Simplifications vs. the Full Spectrum of Reality

Why do weather forecasts sometimes fail? They use models that ignore microclimates near lakes or tall buildings. A hiking map won’t show loose rocks or sudden fog—yet we act surprised when reality adds twists.

Ever noticed how nutrition labels list percentages but not how your body absorbs nutrients? This disconnect between map and territory leads to misunderstandings, because the summary never captures everything that is actually happening.

Maps and models serve as starting points, not final answers. They’re like training wheels for thinking—helpful until you hit rough terrain.

Recognizing their limits helps us stay curious and adaptable. That was Korzybski’s core point: a representation is useful only when we remember what it leaves out.

Map vs Reality: Korzybski’s Contribution

Picture an engineer-turned-philosopher in 1931, scribbling ideas that would change how we understand reality. Alfred Korzybski, a Polish thinker, grew frustrated with how people confused words with actual experiences.

His work grew out of studying the limits of language, and how words relate to the things they describe, during a period of rapid technological change. The problem is still relevant today.

The 1931 New Orleans Paper

At a 1931 scientific meeting in New Orleans, Korzybski dropped a bombshell, often paraphrased as “Whatever we say something is, it isn’t.” His paper argued that language and models act like blurry glasses, filtering details to create usable shortcuts. The same logic applies to financial charts and weather forecasts: both miss hidden variables like investor panic or sudden storms.

| Korzybski’s Concept | Real-World Example |
|---|---|
| Words ≠ Objects | Menu photos vs. actual meals |
| Models Ignore Complexity | Traffic apps missing road closures |
| Time Changes Context | 2010 economic models failing in 2020 |

Key Insights and Philosophical Implications

Korzybski’s biggest warning? All mental models become outdated. Think of nutrition labels—they list calories but skip how your gut processes them. His ideas force us to ask: When did we last update our assumptions about work, relationships, or success?

This philosophy isn’t just academic. It’s why software developers beta-test programs and doctors double-check AI diagnoses. Models guide us, but reality always has the final say. How many decisions today rely on yesterday’s mental shortcuts?

Risks in Finance and Risk Management

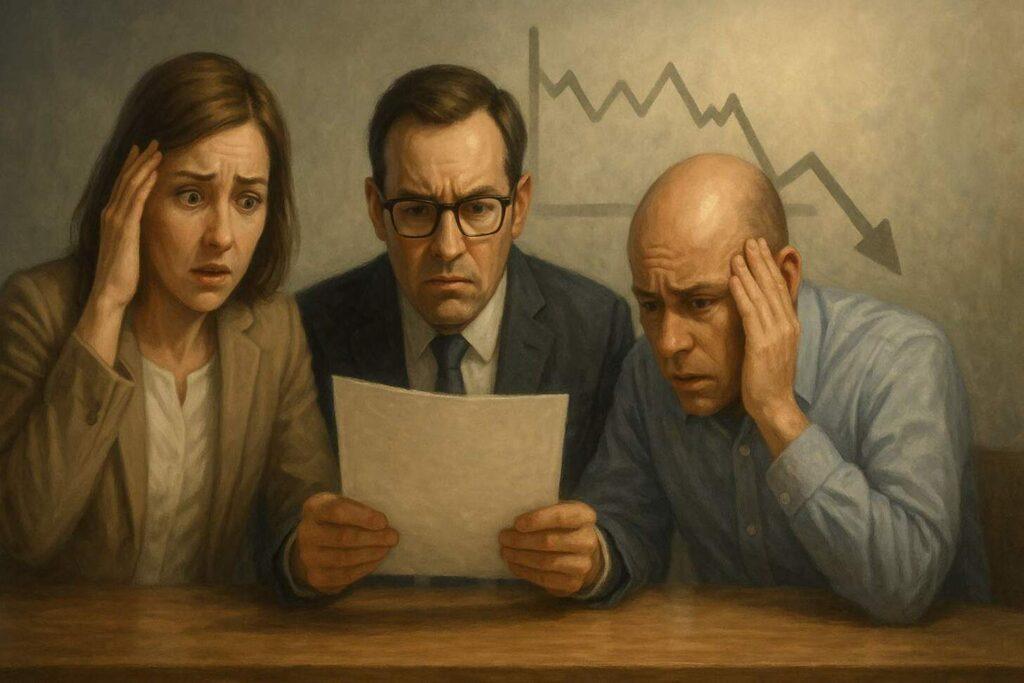

What happens when banks treat financial tools like crystal balls? In 2008, the world learned a brutal lesson: mathematical shortcuts can’t predict human chaos.

Risk managers relied on equations that assumed calm markets, until panic spread like wildfire and exposed how far the models had drifted from the reality they were meant to describe.

Financial Models and the 2008 Crisis

Wall Street’s playbook used Value-at-Risk formulas to estimate losses. These tools treated housing prices like stable math curves. But reality? Homeowners defaulted in waves, and models missed the domino effect. An estimated $19.2 trillion in U.S. household wealth vanished as assumptions crashed into facts.

| Mental Model Assumptions | Real-World Behavior | Impact |
|---|---|---|
| Predictable defaults | Mass mortgage failures | Bank collapses |
| Steady liquidity | Frozen credit markets | Business shutdowns |
| Rational investors | Panic selling | Stock market plunge |
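
To make the gap concrete, here is a minimal Python sketch of a parametric Value-at-Risk estimate. It is an illustration under stated assumptions, not how any particular bank computed risk: the return figures are invented, the function name `parametric_var` is hypothetical, and the normal-distribution assumption is exactly the simplification being criticized.

```python
from statistics import NormalDist, mean, stdev


def parametric_var(returns, confidence=0.99):
    """Estimate one-day Value-at-Risk, assuming returns are normally distributed.

    Returns the loss (as a positive fraction) that the model expects to be
    exceeded only (1 - confidence) of the time.
    """
    mu = mean(returns)
    sigma = stdev(returns)
    # z is negative: it marks the left tail of the standard normal curve.
    z = NormalDist().inv_cdf(1 - confidence)
    return -(mu + z * sigma)


# Hypothetical daily returns from a calm period (invented for illustration).
calm_returns = [0.004, -0.003, 0.002, -0.005, 0.006,
                -0.002, 0.001, -0.004, 0.003, -0.001]

var_99 = parametric_var(calm_returns)

# Hypothetical crisis day: a 9% single-day loss.
crisis_day_return = -0.09
model_was_breached = -crisis_day_return > var_99

print(f"99% one-day VaR from calm data: {var_99:.2%}")
print(f"Crisis-day loss exceeded the model's estimate: {model_was_breached}")
```

With these made-up numbers, the model says a loss worse than roughly 0.8% should occur about one day in a hundred, so a 9% drop looks essentially impossible on the map. The calm-period data simply never contained the territory the model was later asked to describe.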

The Map is Not The Territory Mental Model: Irrationality

Nassim Nicholas Taleb calls these blind spots “Black Swans”—events models deem impossible until they strike. Why did 2008’s analysis fail? It ignored fear, greed, and herd mentality. Like using a recipe that skips salt, the math lacked flavor.

Testing assumptions matters. A 2023 paper found firms updating their risk frameworks monthly survived downturns better. Can we trust tools that can’t adapt? Only if we treat them as rough drafts, not rulebooks.

Learning from The Challenger Disaster

What happens when rocket scientists miss critical warnings? On January 28, 1986, the Space Shuttle Challenger broke apart 73 seconds after liftoff. Seven astronauts perished because risk assessments ignored a crucial variable: the effect of freezing temperatures on rubber O-rings.

Engineers had flagged the issue, but decision-makers treated mathematical probabilities as absolute truth. The risk model was the map; the cold launch pad was the territory.

Incomplete Risk Models and Their Consequences

NASA’s models assumed O-rings would seal properly in cold weather. Reality? Ice formed on the launch pad overnight, and the temperature at liftoff was about 36°F, far below any previously tested launch. Like financial experts in 2008, space planners overlooked edge cases. Both scenarios show how “safe” calculations crumble when outliers strike.

| Model Assumptions | Actual Conditions |
|---|---|
| O-rings function above 53°F | Launch day: 36°F |
| No joint seal failures | Flames breached seals in seconds |
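
One simple safeguard is to record the range of conditions a model was actually checked against and refuse to trust it blindly outside that range. The Python sketch below is a hypothetical illustration, not NASA’s procedure: the class and function names are invented, the 53°F lower bound comes from the table above, and the 85°F upper bound is a placeholder assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidatedRange:
    """The span of conditions a model has actually been checked against."""
    low: float
    high: float

    def covers(self, value: float) -> bool:
        return self.low <= value <= self.high


def check_before_trusting(model_range: ValidatedRange, condition: float) -> str:
    """Say whether a prediction rests on evidence or on extrapolation."""
    if model_range.covers(condition):
        return "within tested conditions"
    return "outside tested conditions: treat the prediction as a guess"


# Lower bound (53°F) is from the table above; the upper bound is a placeholder.
o_ring_experience = ValidatedRange(low=53.0, high=85.0)
launch_day_f = 36.0

verdict = check_before_trusting(o_ring_experience, launch_day_f)
print(verdict)
```

The check does not make the model any smarter. It only makes the edge of the map visible, so that stepping past it becomes a deliberate choice rather than an accident.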

Why do smart teams make fatal errors? They confuse predictions with guarantees. A 1985 report warned about O-ring vulnerabilities, yet leaders dismissed it as “unlikely.” Sound familiar? Banks used similar logic before 2008’s crash.

Testing tools against fresh evidence saves lives. After Challenger, NASA redesigned joints and revised launch rules. But here’s the kicker: Every model has blind spots. What unseen risks lurk in your next big decision?

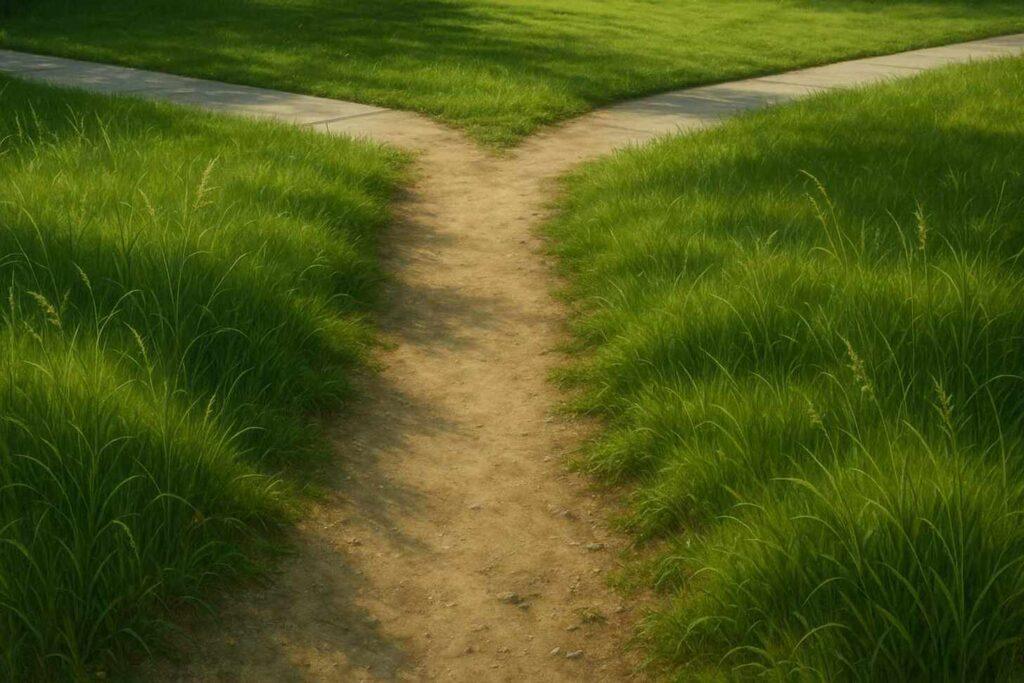

Mental Models and Desire Lines

Ever seen a dirt trail cutting through grass where sidewalks end? These unofficial routes—called desire paths—reveal how people reshape rigid designs. Urban planners draw neat walkways, but real behavior carves smarter shortcuts. Like our brains, cities evolve through trial and error.

When Plans Meet Reality

Robert Macfarlane describes desire paths as “the stories our feet tell.” Universities often wait to pave paths until students create dirt trails through lawns. Why? Human choices expose flaws in the original layout and show how people actually want to move.

A park bench might look perfect on paper, but wind patterns or sun glare can make it unusable. Details like these are exactly what our mental models tend to leave out.

| Planned Design | Actual Use |
|---|---|
| Curved walkways | Straight-line foot traffic |
| Central plaza seating | Shaded corners under trees |
| Designated bike lanes | Riders weaving through gaps |

These mismatches mirror how we handle daily choices. Ever skip steps in a recipe because “it works better”? That’s your mind creating a decision-making shortcut. Models help us start, but lived experience often rewrites the rules.

Smart cities now track desire paths to improve layouts. Helsinki’s planners used GPS data to redesign parks after studying where people actually walked. Adapting beats insisting on initial plans. How many meetings have you cut short because the agenda felt outdated?

Good design emerges when we watch and adjust. Next time you see a dirt trail through grass, ask: What outdated assumptions am I still following?

The Map is Not The Territory Deep Dive

Remember when fitness apps first claimed 10,000 steps daily guaranteed health? Millions tracked their walks—until studies showed individual needs vary wildly. This gap between theory and truth reveals why testing frameworks matters. Models guide us, but reality often rewrites the rules.

Testing Mental Models Against Real-World Evidence

Tech startups use A/B tests to challenge assumptions. They might launch two app versions: one based on “expert” design principles, another shaped by user behavior. Data often reveals unexpected preferences—like bigger buttons outperforming sleek layouts. Nassim Nicholas Taleb warns: “A model might show you some risks, but not the risks of using it.”
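
For readers who want to see what such a test looks like in practice, here is a minimal Python sketch of a two-proportion z-test, one common way to compare two versions. The visitor and conversion counts are invented, the function name `two_proportion_z_test` is hypothetical, and real experiments usually need more care (sample-size planning, multiple metrics) than this shows.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of versions A and B.

    Returns the z statistic and a two-sided p-value under the null
    hypothesis that both versions convert at the same rate.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: the best single estimate if A and B were truly identical.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data: A is the "expert" sleek layout, B has bigger buttons.
z_score, p_value = two_proportion_z_test(conv_a=120, n_a=2000,
                                         conv_b=165, n_b=2000)

print(f"z = {z_score:.2f}, p = {p_value:.4f}")
if p_value < 0.05:
    print("The difference is unlikely to be noise; the expert assumption needs revisiting.")
else:
    print("Not enough evidence yet; keep collecting data.")
```

The point is not the statistics themselves but the habit: the design principle is the map, the user data is the territory, and the test is how the two get compared.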

Medical researchers update protocols through constant trials. When COVID-19 hit, initial treatment models assumed lung damage was the main threat. Later data revealed blood clotting risks, forcing revised approaches. This pivot saved lives by merging lab predictions with hospital realities.

| Model Testing Method | Outcome |
|---|---|
| Cross-checking weather forecasts with local sensors | Improved storm prediction accuracy by 37% |
| Comparing financial projections to market shifts | Reduced portfolio losses during 2022 downturn |

Why trust just one lens? Cities use traffic models alongside live camera feeds to manage congestion. Farmers blend soil data with generations-old planting wisdom. When was the last time you pressure-tested your go-to strategies?

Systematic checks prevent disasters. A 2023 study found teams reviewing assumptions quarterly made 23% fewer costly errors. Balance trust in tools with humble curiosity. After all, even the best map needs compass corrections.
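
A systematic check can be as small as comparing what a model predicted with what was later observed, and flagging the model for review when the gap grows too large. The Python sketch below assumes invented temperature readings and a hypothetical tolerance of 2 degrees; the function names are illustrative, not from any specific tool.

```python
def mean_absolute_error(predicted, observed):
    """Average size of the gap between predictions and observations."""
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed must have the same length")
    if not predicted:
        raise ValueError("need at least one data point")
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(predicted)


def needs_review(predicted, observed, tolerance):
    """True when the model has drifted further from reality than we accept."""
    return mean_absolute_error(predicted, observed) > tolerance


# Hypothetical forecast temperatures vs. readings from a local sensor.
forecast = [20.0, 22.0, 19.0, 21.0]
sensor = [21.0, 25.0, 18.0, 26.0]

error = mean_absolute_error(forecast, sensor)
flagged = needs_review(forecast, sensor, tolerance=2.0)

print(f"Mean absolute error: {error}")
print(f"Model needs review: {flagged}")
```

Choosing the tolerance is a judgment call that depends on how costly an error is. Writing it down in advance matters more than the exact number, because it turns "the model feels off" into a test that can actually fail.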

Key Lessons from Cognitive Science

Have you ever assumed a coworker was angry because they didn’t smile? Our brains constantly fill gaps using patterns from past experiences. Cognitive scientists call these shortcuts schemas—mental blueprints that help us process information quickly. Like speed-dial buttons for thinking, they let us navigate daily life without overanalyzing every detail.

Cognitive Schemas, Heuristics, and Reality

Schemas organize knowledge: “Dogs bark” or “Rain means umbrellas.” Heuristics are decision rules—like choosing a restaurant with the longest line. Both save time but risk oversimplification. Imagine assuming all snakes are venomous after one scary encounter. Helpful? Maybe. Accurate? Not always.

| Mental Shortcut | Benefit | Limitation |
|---|---|---|
| Schema: “Clouds mean rain” | Quick weather prep | Misses sunny spells during overcast |
| Heuristic: “Expensive = quality” | Simplifies shopping | Overlooks affordable gems |
| Schema: “Quiet people are shy” | Social ease | Ignores cultural nuances |

Why do we stick with flawed shortcuts? Our brains conserve energy, and processing every detail would overwhelm us. A widely repeated, if loosely sourced, estimate puts the number of decisions a person makes at around 35,000 a day. Without schemas, we’d stall at choosing socks.

But watch for cracks in the blueprint. Ever heard “Follow your gut”? That heuristic works when picking ice cream flavors, not when evaluating stock markets. Sophisticated thinking blends instinct with data checks. When did you last verify your assumptions against fresh evidence?

Balancing speed and accuracy keeps our mental maps useful. Update your schemas like smartphone apps—regularly and with purpose.

Strategies for Smarter Decision Making

Ever bought shoes online that looked perfect in photos but pinched your toes? That gap between expectation and reality shows why flexible strategies matter. Smart choices require balancing confidence with curiosity—like a chef tasting dishes mid-recipe.

Embracing Probabilistic Thinking

Instead of yes/no answers, ask: “What’s the 70% likely outcome?” Investors using this approach diversify portfolios, knowing markets shift. A 2023 study found teams estimating probabilities reduced bad bets by 41%.

| Single-Model Approach | Multi-Model Strategy | Outcome |
|---|---|---|
| Trusting one weather app | Checking radar, humidity, and wind patterns | 92% better storm prep |
| Relying solely on financial charts | Adding sentiment analysis and historical data | Reduced 2022 portfolio losses by 33% |
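
Probability estimates are only useful if they are checked against what actually happened. One standard way to score them is the Brier score: the average squared gap between each forecast probability and the outcome (1 if the event happened, 0 if not). The Python sketch below uses invented forecasts and outcomes purely for illustration.

```python
def brier_score(forecasts, outcomes):
    """Mean squared difference between forecast probabilities and outcomes.

    0.0 is a perfect score; lower is better.
    """
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    if not forecasts:
        raise ValueError("need at least one forecast")
    return sum((f - o) ** 2 for f, o in zip(forecasts, outcomes)) / len(forecasts)


# What actually happened in five hypothetical decisions (1 = event occurred).
outcomes = [1, 0, 1, 0, 1]

# A forecaster who thinks in probabilities...
probabilistic = [0.7, 0.7, 0.9, 0.2, 0.6]
# ...versus one who only ever answers a flat "yes" or "no".
all_or_nothing = [1.0, 1.0, 1.0, 0.0, 1.0]

probabilistic_score = brier_score(probabilistic, outcomes)
all_or_nothing_score = brier_score(all_or_nothing, outcomes)

print(f"Probabilistic forecaster: {probabilistic_score:.3f}")
print(f"Yes/no forecaster:        {all_or_nothing_score:.3f}")
```

In this made-up example both forecasters lean the wrong way on the second decision, but the one who said "70%" is penalized far less than the one who said "certain". Hedged estimates cost little when right and hurt less when wrong.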

Using Multiple Mental Models to Challenge Assumptions

Urban planners combine traffic models with camera feeds and citizen feedback. This mix revealed commuters avoiding a “perfect” bridge design—saving $2M in redesign costs.

Try these steps today:

- Label assumptions as “temporary drafts” needing updates

- Use three different decision-making frameworks for big choices

- Schedule monthly “reality checks” against fresh data

Ready to trade certainty for smarter adaptability? Your next decision could benefit from seeing models as flashlights—not fixed blueprints.

Industry Examples and Practical Applications

Ever wonder why bridges collapse despite perfect blueprints? Real-world challenges expose gaps between plans and outcomes. Let’s explore how professionals navigate this tension through two revealing examples.

Risk Analysis in Financial Engineering

In 2008, models predicted stable housing markets, until an estimated $19.2 trillion in U.S. household wealth vanished. Banks relied on equations assuming predictable defaults, but reality delivered chaos. One major firm ignored warnings about model limitations, treating risk assessments as gospel rather than guides.

| Model Prediction | Actual Outcome |
|---|---|
| 3% default rate | 12% mortgage failures |
| Steady liquidity | Frozen credit markets |

Urban Behavior and the Creation of Desire Paths

Helsinki’s planners learned this lesson through foot traffic patterns. Designed walkways curved artistically, but residents carved straight dirt lines through grass. GPS data revealed 78% ignored the official design. The city later paved these organic routes, saving $1.4 million in redesign costs.

Key lessons emerge:

- Theory works until real people interact with systems

- Static models can’t predict evolving behaviors

- Successful design adapts to observed patterns

How often do we mistake paper plans for living systems? Regular testing and humility keep our work grounded in reality—not just projections.

Conclusion

Ever trusted a recipe that flopped despite perfect steps? This gap between plans and outcomes reveals why flexible thinking matters. Models guide decisions—like GPS suggesting routes—but reality often paints outside the lines.

Nassim Taleb’s warning rings true: “Never cross a river if it is on average four feet deep.” From Wall Street’s 2008 crash to NASA’s Challenger tragedy, rigid frameworks failed when complexity struck. Updated assumptions and multi-angle checks prevent such pitfalls.

Try these reality tests:

- Treat favorite strategies as temporary drafts, not holy texts

- Blend data with lived experience—like chefs tasting soups mid-cook

- Schedule quarterly “model checkups” using fresh evidence

Curiosity beats certainty. Helsinki’s planners saved millions by paving paths walkers actually used. What outdated lines still shape your choices?

Share these insights with peers facing tough decisions. Life’s terrain shifts constantly—our maps need regular updates. Ready to redraw yours?