What happens when we treat life like a game with fixed rules? This question lies at the heart of the ludic fallacy mental model, a concept popularized by author and scholar Nassim Nicholas Taleb.

In his books The Black Swan and Antifragile, Taleb warns against oversimplifying real-world uncertainty into neat, game-like models. Games have clear rules—like chess or blackjack—but life? It’s messy, unpredictable, and full of surprises.

Taleb coined the term in The Black Swan (2007) after observing how experts often ignore chaotic variables. For example, in the run-up to the 2008 financial crisis, banks relied on Value at Risk models that treated markets like predictable games. These models failed spectacularly because they couldn’t account for rare, high-impact events, which Taleb calls “Black Swans.”

Think of it this way: In a casino, the odds are fixed. But in real life? A tiger might attack a performer (a real example from Taleb’s work), or a housing bubble might collapse.

As the philosopher Alfred Korzybski famously put it, “The map is not the territory,” a point Taleb’s streetwise character Fat Tony would happily second. Simplified models create false confidence, blinding us to the chaos lurking beyond spreadsheets and theories.

Key Takeaways

- The ludic fallacy mental model describes mistaking game-like rules for real-world complexity.

- Nassim Taleb introduced the concept to explain failures in finance, science, and everyday decision-making.

- Games have fixed rules; life has unpredictable variables like rare “Black Swan” events.

- Overreliance on models like Value at Risk contributed to the 2008 financial crisis.

- Real-world risks require flexibility, not rigid formulas.

The Ludic Fallacy Mental Model Unveiled

Have you ever noticed how life doesn’t play by the rules? That’s the core idea behind treating reality like a predictable game. While board games and casinos operate with fixed probability systems, our actual experiences overflow with surprises no spreadsheet can contain.

Defining the Gamification Misconception

Gamification works for loyalty programs—not life choices. When we apply game rules to complex decisions, we ignore wildcards like sudden job losses or health crises.

Think of weather forecasts: they use probability models but still get surprised by freak storms. Understanding the ludic fallacy reveals how often we misjudge chance and risk, for example by assuming a coin is fair simply because the rules say so.

| Aspect | Games | Real Life |
|---|---|---|
| Rules | Fixed & predictable | Fluid & unknown |
| Uncertainty | Controlled odds | Chaotic variables |
| Outcomes | Repeatable | Unique events |

Nassim Taleb and the Warning Against Simplistic Models

Nassim Taleb’s analysis of Black Swans shows why rigid systems fail. Banks once treated markets like roulette wheels, until the 2008 crash proved their models couldn’t handle rare disasters. Taleb’s argument, in short, is that the future has no obligation to respect our spreadsheets.

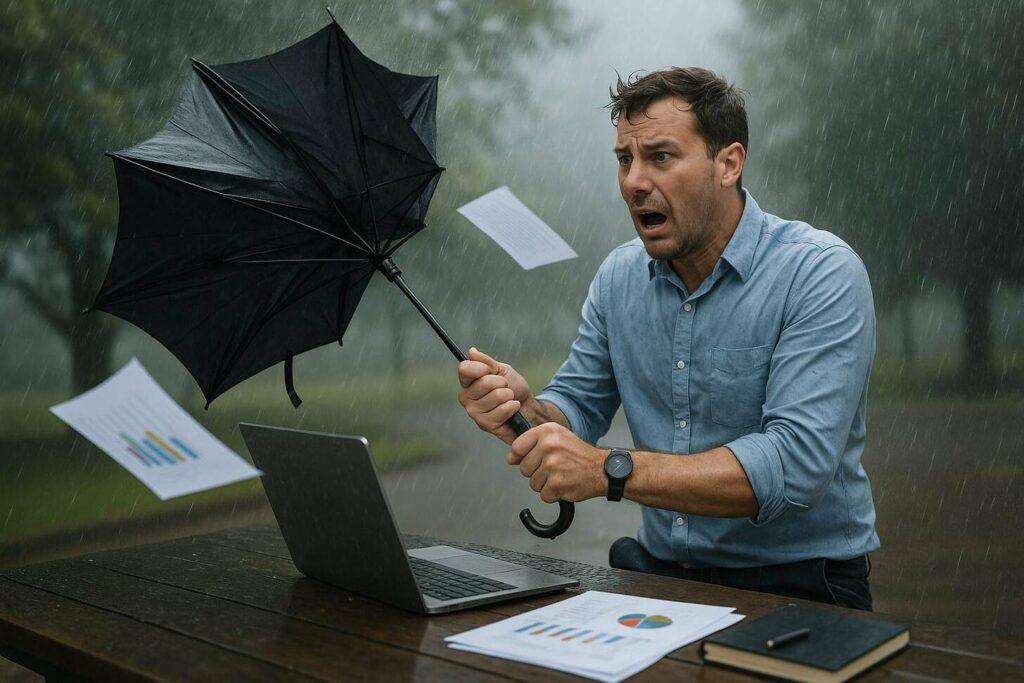

Ever planned a picnic ruined by rain? That’s everyday proof. Real thinking means preparing for multiple scenarios, not betting on single odds. Flexibility beats false confidence every time.

Roots and Evolution of the Ludic Fallacy

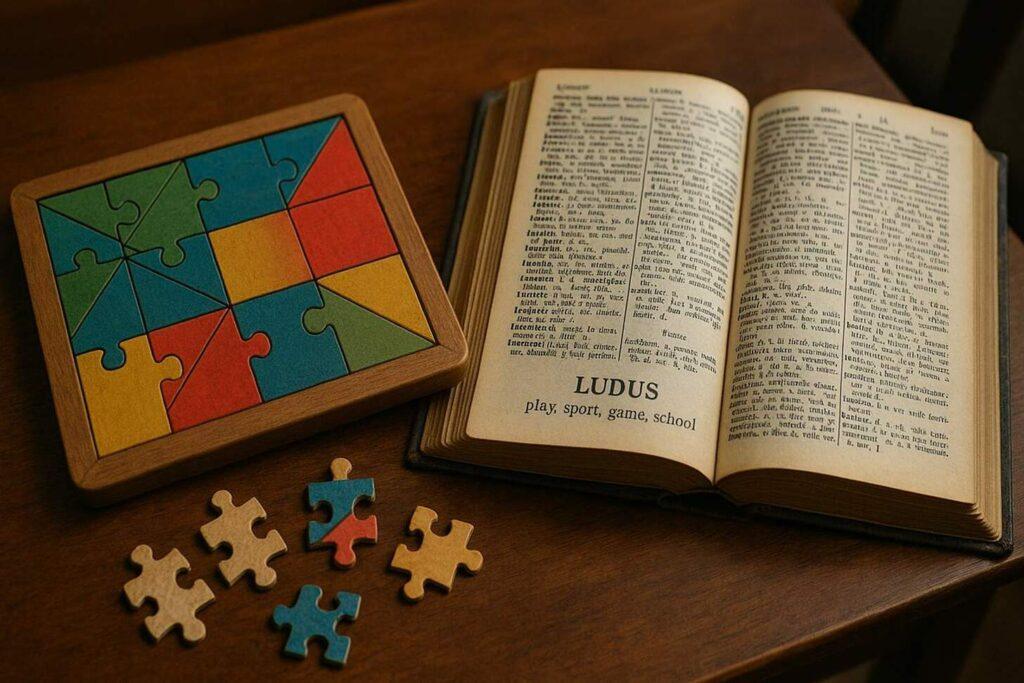

The term “ludic” isn’t just about play—it’s rooted in ancient history. Latin thinkers used ludus to describe structured games with clear rules. But life? It’s never that simple. This gap between controlled systems and messy reality shapes how we misunderstand risk.

Latin Origins and the Concept of “Ludus”

Ever wonder why we call puzzles “games”? The Latin word ludus—meaning play or sport—laid the groundwork.

Ancient Romans used it for theater, gladiator matches, and board games. Over time, this idea of structured competition seeped into how we view the world.

Taleb spotted a problem. In his book The Black Swan, he argues that treating life like a ludus ignores wild variables. Think of it this way: Chess has fixed moves, but real-world storms or stock crashes? They laugh at rules.

Taleb’s Journey from Theory to Real-World Application

How did a Wall Street trader become a critic of models? Taleb’s early career exposed him to flawed financial formulas.

After the 2008 crash, his warnings about rigid systems gained traction. His work shifted from abstract math to practical guides for navigating chaos.

Why does this matter today? As historical analysis of games and systems shows, clinging to old rules invites disaster. Modern risk assessment needs flexibility—like preparing for both rain and sunshine, even if the forecast says “clear skies.”

The Ludic Fallacy Mental Model in Decisions

Ever bet on a coin flip and felt certain of the outcome? That’s game logic at work—a system of rules and odds we trust too easily. But life doesn’t flip coins.

It throws hurricanes, pandemics, and stock market crashes. This gap between controlled games and messy reality is where bad decisions thrive.

When Predictable Systems Meet Unpredictable Outcomes

Casinos love games because they control the variables. A coin has two sides, and roulette wheels have fixed slots.

But what happens when we apply this thinking to real life? Banks learned this lesson in 2008. They treated mortgages like blackjack hands—until housing markets crashed like a drunk roulette ball.

| Factor | Games | Real World |
|---|---|---|
| Variables | Known (e.g., 52 cards) | Unknown (e.g., new viruses) |
| Outcomes | Limited (win/lose) | Endless possibilities |
| Surprises | Rare (house always wins) | Common (Black Swans) |

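Taleb’s coin flip example says it all. A coin described as fair comes up heads 99 times in a row. What are the odds of tails on the next flip? The textbook answer is 50%, because the rules say every flip is independent. The streetwise answer is that the coin is almost certainly loaded, which means the stated rules themselves are wrong. That’s reality overriding your probability charts.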
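In Taleb’s version of the coin example in The Black Swan, a coin described as fair comes up heads 99 times in a row. The sketch below is one way to put numbers on the streetwise reaction, using Bayes’ rule. The function name, the one-in-a-million prior, and the assumption that a loaded coin always lands heads are all illustrative choices, not anything taken from Taleb.

```python
from fractions import Fraction

def posterior_loaded(prior_loaded: Fraction, heads_in_a_row: int,
                     p_heads_if_loaded: Fraction = Fraction(1)) -> Fraction:
    """Posterior probability that the coin is loaded after a run of heads."""
    like_loaded = p_heads_if_loaded ** heads_in_a_row   # likelihood if loaded
    like_fair = Fraction(1, 2) ** heads_in_a_row        # likelihood if fair
    numerator = prior_loaded * like_loaded
    return numerator / (numerator + (1 - prior_loaded) * like_fair)

# Hypothetical prior: a one-in-a-million suspicion that the game is rigged.
prior = Fraction(1, 1_000_000)

for n in (0, 10, 20, 99):
    print(f"after {n:>2} heads in a row: P(loaded) = {float(posterior_loaded(prior, n)):.6f}")
```

Even with a tiny initial suspicion, about 20 straight heads is enough to make "the coin is loaded" the more likely explanation. Clinging to the 50% answer after 99 heads means trusting the rulebook over the evidence.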

So how do we adapt? Start by asking: “What’s the weirdest thing that could happen here?” Pack for rain at picnics, make backup plans for job losses, and keep savings for market crashes. Ditch the spreadsheets. Embrace the storm.

Games vs. Reality: What’s the Difference?

Imagine planning a perfect road trip using a board game’s rulebook. Sounds ridiculous, right? Yet we often treat real-world challenges like predictable games with fixed outcomes, falling into the ludic fallacy.

Let’s explore examples of why this approach fails when life throws curveballs, like a coin that turns out not to be fair.

Why Casino Rules Don’t Work Outside the Casino

Casinos thrive on controlled rules. Blackjack dealers follow exact protocols, and slot machines use programmed odds. But step outside the casino doors?

A sudden job loss or global pandemic can’t be solved with a dealer’s handbook. This gap between structured games and messy reality explains why financial models crumble during crises.

| Factor | Games | Real World |
|---|---|---|
| Control | Full (e.g., dice rolls) | Partial (weather, markets) |
| Surprises | Rare (house edge) | Frequent (new tech, disasters) |
| Adaptation | Fixed strategies | Constant adjustments |

Sports offer another clue. Football teams practice plays for known scenarios—but what if a thunderstorm hits during the Super Bowl? Real life doesn’t pause for weather delays. As practical frameworks show, success requires embracing uncertainty, not fighting it.

Here’s the twist: Thinking like a game master might help you win Monopoly, but it won’t prepare you for a housing market crash.

Next time you face a big decision, ask: “What invisible chaos could change everything?” Sometimes the best move is tossing the rulebook altogether.

Financial Lessons From The 2008 Crisis

Picture a poker player betting their life savings on a “sure win” hand. That’s how banks approached risk before 2008—using models that treated markets like predictable card games.

The result? A global meltdown that erased trillions of dollars in wealth. Let’s unpack how overconfidence in flawed systems turned finance into a house of cards.

Value at Risk (VaR) Models and Underestimated Risks

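Banks loved VaR models because they boiled risk down to a single number: the loss a portfolio should not exceed on, say, 99% of days, estimated from past data. But that number says nothing about how bad the remaining 1% can get, and estimates built on history ignore disasters that haven’t happened yet. Imagine a weather app that only shows sunny days.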

When the 2008 storm hit, losses that the models rated as roughly 1% events arrived again and again. Reality? By one figure attributed to the IMF, these models missed 71% of actual losses.
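To see why a VaR figure can create false comfort, here is a minimal sketch of a one-day 99% VaR calculated under a normal (bell-curve) assumption. Everything in it is hypothetical: the function name, the simulated "calm" returns, and the 9% crisis-day loss are invented for illustration and are not drawn from any bank’s actual model or data.

```python
import random
from statistics import NormalDist, mean, stdev

def parametric_var(returns, confidence=0.99):
    """One-day VaR under a normal assumption, as a positive loss fraction."""
    mu, sigma = mean(returns), stdev(returns)
    z = NormalDist().inv_cdf(1 - confidence)  # about -2.33 at 99% confidence
    return -(mu + z * sigma)

random.seed(42)

# Hypothetical calm period: 500 daily returns with about 1% daily volatility.
calm_returns = [random.gauss(0.0005, 0.01) for _ in range(500)]
var_99 = parametric_var(calm_returns)

# Hypothetical crisis day, far worse than anything in the calm sample.
crisis_loss = 0.09

print(f"99% one-day VaR estimated from calm data: {var_99:.2%}")
print(f"Crisis-day loss: {crisis_loss:.2%}, or {crisis_loss / var_99:.1f}x the VaR")
```

The VaR number only marks a threshold. It is silent about how large a loss can be once that threshold is crossed, and it inherits whatever blind spots the historical sample has.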

The Role of “Black Swans” in Market Collapse

What happens when you mix mortgage bets with once-in-a-lifetime Black Swan events? Chaos. Housing prices crashed like a rigged roulette wheel. Banks had no backup plans for this uncertainty.

As losses piled up, even experts realized their probability charts were useless against real-world variables.

Here’s the kicker: Life isn’t a game with fixed odds. The 2009 IMF report proved that clinging to neat formulas invites disaster.

Next time someone says “trust the model,” ask: “What invisible monsters are hiding in the data?” Sometimes, the world rewards those who prepare for surprises—not spreadsheets.

The Hidden Dangers of Simplified Models

Ever built a house on cracked foundations? That’s what happens when we trust models that ignore rare but earth-shaking events. These frameworks work like narrow flashlights—bright for familiar paths, blind to lurking tigers in the shadows.

Ludic Fallacy Mental Model: Ignoring Fat Tails and Rare Events

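Picture flipping a coin 1,000 times. Standard models allow only heads or tails. But what if it lands on its edge? That’s an outcome the model never listed. Financial models suffer from a related flaw: they assume thin, bell-curve tails, while real markets have “fat tails,” meaning extreme moves happen far more often than the bell curve predicts.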

In finance, this thinking led to 2008’s meltdown. Banks assumed housing markets would follow tidy patterns, not crash like a drunken Jenga tower.

Why does this matter? Life isn’t a coin flip. The gambler’s fallacy sees patterns in independent flips; the ludic fallacy makes a different mistake, trusting that past data and stated odds cover everything that can happen. Real variables, like sudden tech breakthroughs or pandemics, don’t fit spreadsheet columns.

As political scientist Scott Sagan put it, in a line often quoted in risk circles, “Things that have never happened before happen all the time.”

| Model Assumptions | Real-World Truths |
|---|---|
| Predictable patterns | Chaotic surprises |
| Fixed probabilities | Shifting odds |
| Controlled inputs | Hidden randomness |

Here’s the fix: Treat models as rough sketches, not blueprints. Build buffers for freak storms—literal and financial. Ask: “What’s the weirdest thing that could wreck this plan?” Sometimes survival means expecting the coin to stand upright.
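To put rough numbers on what a fat tail means, the sketch below compares how often a move larger than k standard deviations occurs under a bell curve versus a fat-tailed alternative. The Student-t distribution with 3 degrees of freedom is an assumed stand-in for "fat-tailed," chosen because its tail probability has a simple closed form; real market returns need not follow it.

```python
import math

def normal_tail(k: float) -> float:
    """P(|Z| > k) for a standard normal variable."""
    return math.erfc(k / math.sqrt(2))

def student_t3_tail(k: float) -> float:
    """P(|X| > k) for a Student-t with 3 degrees of freedom, rescaled to unit variance.

    Uses the closed-form CDF of the t distribution with 3 degrees of freedom.
    """
    return 1 - (2 / math.pi) * (k / (1 + k * k) + math.atan(k))

for k in (2, 4, 6):
    thin, fat = normal_tail(k), student_t3_tail(k)
    print(f"{k}-sigma move: normal {thin:.2e}, fat-tailed {fat:.2e}, ratio {fat / thin:,.0f}x")
```

Around two standard deviations the two curves roughly agree, which is why bell-curve models look fine on ordinary days. The gap opens up at the extremes, where the fat-tailed curve assigns rare moves a probability that is orders of magnitude higher.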

Strategies for Embracing Uncertainty

What if your best-laid plans got flipped by a surprise storm? Life’s twists demand more than spreadsheets and checklists. Let’s explore tools to thrive when chaos hits—no crystal ball required.

Developing Critical Thinking in Complex Environments

Start by questioning every assumption. When planning a career move, ask: “What unexpected factors could change this industry?” Treat probability as a range, not a fixed number. For example:

| Traditional Approach | Adaptive Thinking |
|---|---|
| “There’s a 10% chance of failure” | “How would we handle three simultaneous crises?” |
| Relying on past data | Testing worst-case scenarios |
| Single-point forecasts | Preparing multiple backup plans |

Try this exercise: List five wild risks for your next big decision. Could a new law disrupt your business? Might AI reshape your job? This stretches your thinking beyond routine rules.

Building Resilience Through Diverse Perspectives

Form a “chaos committee” with people from different backgrounds. A teacher, an engineer, and an artist will spot uncertainties you’d miss alone. When COVID hit, cross-functional teams helped many companies adapt to remote work and supply chain shocks.

Here’s a quick practice: Before major choices, ask three people: “What’s the weirdest thing that could go wrong?” Their answers reveal blind spots in your models. Remember—the world rewards those ready for rainbows and thunderstorms.

Bridging Taleb’s Theories with Modern Risk Management

How do modern risk managers sleep at night when the next crisis could be around the corner? Nassim Taleb’s ideas offer a flashlight in the dark—not to predict storms, but to build sturdier shelters. His work pushes industries to rethink rigid models and embrace the messy real life variables that break spreadsheets.

Integrating Insights in Finance and Beyond

Meet two fictional thinkers: Dr. John and Fat Tony. Dr. John trusts probability charts and historical data. Fat Tony sniffs out hidden assumption flaws, asking, “What’s the casino not telling us?” Their approaches reveal a timeless tension:

| Dr. John (Analytical) | Fat Tony (Intuitive) |
|---|---|
| Relies on past data | Looks for real-world cracks |
| Uses fixed rules | Adapts to shifting odds |
| Fears black swan events | Prepares for them |

Modern firms blend both styles. Banks now stress-test portfolios against rare disasters, not just common hiccups. Tech companies hire “chaos engineers” to deliberately break their own systems, finding weak spots before a real outage does.
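A stress test in this spirit doesn’t need a probability for each disaster; it simply asks what a plan would lose if the disaster happened. The sketch below shows the idea on a toy scale. The portfolio, the scenario names, and every shock size are made-up numbers for illustration, not calibrated estimates.

```python
# Hypothetical portfolio: asset class -> value in dollars.
portfolio = {"stocks": 60_000, "bonds": 30_000, "cash": 10_000}

# Hypothetical shock scenarios: asset class -> fractional change in value.
scenarios = {
    "ordinary bad month": {"stocks": -0.05, "bonds": -0.01},
    "2008-style crash": {"stocks": -0.45, "bonds": -0.05},
    "stocks and bonds fall together": {"stocks": -0.25, "bonds": -0.20},
}

def stress_test(portfolio, scenarios):
    """Return the dollar loss under each scenario (positive number = loss)."""
    results = {}
    for name, shocks in scenarios.items():
        # Assets not mentioned in a scenario are assumed to be unaffected.
        change = sum(value * shocks.get(asset, 0.0) for asset, value in portfolio.items())
        results[name] = -change
    return results

losses = stress_test(portfolio, scenarios)
total = sum(portfolio.values())
for name, loss in sorted(losses.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: lose ${loss:,.0f} ({loss / total:.0%} of the portfolio)")
```

The useful output is not a forecast but a question: could you live with the worst line on that list? If not, the plan needs a bigger buffer, whatever the model says about the odds.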

Here’s the twist: You don’t need a Wall Street budget to apply this. Next time you plan a career move or investment, ask: “What would Fat Tony spot that I’m missing?” Sometimes the best thinking happens when you step away from the spreadsheet—and into the unpredictable world.

Conclusion

Have you ever trusted a map that led you nowhere? That’s the danger of treating life like a predictable game. The 2008 financial crisis, in which banks lost trillions clinging to flawed models, shows how understanding mental frameworks like this one can save us from costly mistakes.

Nassim Taleb’s work reminds us: Real thinking means preparing for storms and sunshine. While spreadsheets love tidy rules, the world thrives on surprises. Remember the housing crash? Experts missed it because their models ignored rare “black swan” events.

Here’s the takeaway: Ditch the rulebook. Build plans that bend instead of break. Ask: “What invisible risks might my spreadsheet miss today?” Whether managing money or career moves, leave room for chaos.

Ready to rethink how you handle uncertainty? Start small. Review one assumption this week. Could a wildcard event change everything? Life isn’t checkers—it’s improv theater with hidden trapdoors. Embrace the mess, and you’ll dance through the surprises.